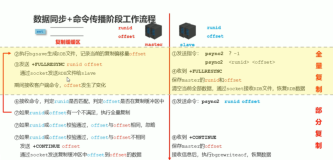

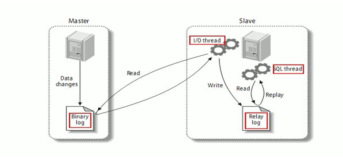

I. The STOP SLAVE flow

User thread:

stop_slave

-> terminate_slave_threads

-> passes in the rpl_stop_slave_timeout setting, used as the timeout for waiting for the SQL thread (and then the IO thread) to exit.

SQL thread:

-> mark the SQL thread for termination: mi->rli->abort_slave= 1; this flag is what actually makes the thread stop

-> terminate_slave_thread (SQL thread)

-> send SIGUSR1 to the SQL thread with pthread_kill; the signal is caught, but its handler, empty_signal_handler, does nothing

-> call thd->awake(THD::NOT_KILLED)

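-> wait for the SQL thread to exit, using rli->run_lock and rli->stop_cond. The waiter wakes up every 2 seconds (re-sending the signal each time) and checks whether rpl_stop_slave_timeout has been exceeded. If it has, the waiter gives up and STOP SLAVE reports a timeout.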

-> if (mi->rli->flush_info(TRUE)) force-flush the relay log info
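The wait loop in terminate_slave_thread can be modelled in a few lines. This is a minimal C++ sketch under stated assumptions, not MySQL's code:

- ToySlaveThread, thread_body and terminate_toy_thread are hypothetical names.
- Ticks are milliseconds instead of the server's 2 seconds.
- A long nanosleep stands in for whatever blocking call the slave thread is in.

It shows why an empty SIGUSR1 handler is enough. The signal only interrupts the blocking call (EINTR), and the thread then re-reads abort_slave on its own. The signal is re-sent on every tick because one that arrives just before the thread blocks would otherwise be lost.

```cpp
#include <pthread.h>
#include <signal.h>
#include <time.h>
#include <atomic>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>

// Toy model of the STOP SLAVE handshake (assumption: simplified, NOT MySQL code).
static void empty_signal_handler(int) {}  // intentionally does nothing

// Install the handler without SA_RESTART so blocking calls fail with EINTR.
static void install_empty_handler() {
  struct sigaction sa = {};
  sa.sa_handler = empty_signal_handler;
  sigemptyset(&sa.sa_mask);
  sa.sa_flags = 0;
  sigaction(SIGUSR1, &sa, nullptr);
}

struct ToySlaveThread {
  std::mutex run_lock;                // stands in for rli->run_lock
  std::condition_variable stop_cond;  // stands in for rli->stop_cond
  bool running = true;                // stands in for rli->slave_running
  std::atomic<bool> abort_slave{false};
  std::thread th;
};

// Thread body: block in a long sleep and re-check the flag after every wake-up.
// It exits only when abort_slave is set AND busy_ms have elapsed
// (busy_ms > 0 mimics a statement that must finish first).
static void thread_body(ToySlaveThread* t, long busy_ms) {
  auto start = std::chrono::steady_clock::now();
  for (;;) {
    long elapsed = std::chrono::duration_cast<std::chrono::milliseconds>(
                       std::chrono::steady_clock::now() - start).count();
    if (t->abort_slave.load() && elapsed >= busy_ms) break;
    struct timespec ts = {10, 0};  // "blocking call": 10 s unless interrupted
    nanosleep(&ts, nullptr);
  }
  std::lock_guard<std::mutex> lk(t->run_lock);
  t->running = false;
  t->stop_cond.notify_all();
}

// Returns 0 once the thread has exited, 1 if *budget_ms runs out first.
static int terminate_toy_thread(ToySlaveThread* t, long* budget_ms, long tick_ms) {
  t->abort_slave.store(true);  // the flag is what really stops the thread
  std::unique_lock<std::mutex> lk(t->run_lock);
  while (t->running) {
    pthread_kill(t->th.native_handle(), SIGUSR1);  // kick it out of a blocking call
    t->stop_cond.wait_for(lk, std::chrono::milliseconds(tick_ms));
    if (*budget_ms >= tick_ms)
      *budget_ms -= tick_ms;
    else if (t->running)
      return 1;  // timed out while the thread is still running
  }
  return 0;
}
```

With busy_ms > 0 the thread keeps working for a while after the flag is set, so a small budget runs out and the call returns 1. That is roughly what a STOP SLAVE timeout looks like. A second call with a larger budget then succeeds.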

IO thread:

-> mark the IO thread for termination: mi->abort_slave=1; this flag is what actually makes the thread stop

-> terminate_slave_thread IO线程

Same as above, but for the IO thread.

-> if (mi->flush_info(TRUE)) force-flush the master info

-> if (mi->rli->relay_log.is_open() && mi->rli->relay_log.flush_and_sync(true)) force-flush the relay log

SQL thread:

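The SQL thread checks the flag in its loop. Once mi->rli->abort_slave= 1 is set, it stops applying further events.

DML: rolled back

DDL: waited for until it finishes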
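Here is the SQL-thread side of the handshake as a toy model. The assumptions are:

- EvType, Event and ToyApplier are hypothetical.
- The real thread decides via sql_slave_killed and rolls back in Relay_log_info::cleanup_context. This sketch does not reproduce either.

In the model, the flag is looked at only between events. So the DML of an unfinished BEGIN ... XID group is discarded, while a DDL, modelled as a single event, always completes.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Toy model (assumption: simplified event stream, NOT MySQL code).
enum class EvType { BEGIN, ROW, XID, DDL };

struct Event {
  EvType type;
  std::string change;  // row change or DDL text (unused for BEGIN/XID)
};

struct ToyApplier {
  std::vector<std::string> committed;  // changes that became durable
  std::vector<std::string> pending;    // changes of the open transaction
  bool in_trx = false;

  void apply(const Event& ev) {
    switch (ev.type) {
      case EvType::BEGIN:
        in_trx = true;
        pending.clear();
        break;
      case EvType::ROW:
        pending.push_back(ev.change);
        break;
      case EvType::XID:  // commit the open transaction
        committed.insert(committed.end(), pending.begin(), pending.end());
        pending.clear();
        in_trx = false;
        break;
      case EvType::DDL:  // a single event: once started it always finishes
        committed.push_back(ev.change);
        break;
    }
  }

  // Apply events; abort_slave is observed just before event number abort_at.
  void run(const std::vector<Event>& evs, std::size_t abort_at) {
    for (std::size_t i = 0; i < evs.size(); ++i) {
      if (i == abort_at) break;  // the flag is checked only between events
      apply(evs[i]);
    }
    if (in_trx) {  // DML of an unfinished transaction: roll it back
      pending.clear();
      in_trx = false;
    }
  }
};
```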

Taking the SQL thread as an example, in

handle_slave_sql

-> sql_slave_killed checks whether mi->rli->abort_slave has been set

Rollback stack frames:

#0 trx_rollback_low (trx=0x7fffd78045f0) at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/trx/trx0roll.cc:202

#1 0x0000000001bfed1c in trx_rollback_for_mysql (trx=0x7fffd78045f0) at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/trx/trx0roll.cc:289

#2 0x00000000019cbb3f in innobase_rollback (hton=0x2f2c420, thd=0x7ffe78022a10, rollback_trx=true)

at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/handler/ha_innodb.cc:5126

#3 0x0000000000f808dc in ha_rollback_low (thd=0x7ffe78022a10, all=true) at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/handler.cc:2007

#4 0x0000000001879f91 in MYSQL_BIN_LOG::rollback (this=0x2e87840, thd=0x7ffe78022a10, all=true)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/binlog.cc:2447

#5 0x0000000000f80b72 in ha_rollback_trans (thd=0x7ffe78022a10, all=true) at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/handler.cc:2094

#6 0x00000000016dd28d in trans_rollback (thd=0x7ffe78022a10) at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/transaction.cc:356

#7 0x00000000018da937 in Relay_log_info::cleanup_context (this=0x7ffe80024d30, thd=0x7ffe78022a10, error=true)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_rli.cc:1867

#8 0x00000000018c4b7f in handle_slave_sql (arg=0x7ffe8001dbe0) at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_slave.cc:7645

#9 0x0000000001945620 in pfs_spawn_thread (arg=0x7ffe800381d0) at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/perfschema/pfs.cc:2190

#10 0x00007ffff7bc6aa1 in start_thread () from /lib64/libpthread.so.0

#11 0x00007ffff6719bcd in clone () from /lib64/libc.so.6

Stack frames of the user thread running STOP SLAVE:

Thread 9 (Thread 0x7fc266dce700 (LWP 26415)):

#0 0x00007fc266809d42 in pthread_cond_timedwait@@GLIBC_2.3.2 () from /lib64/libpthread.so.0

#1 0x0000000000e77514 in native_cond_timedwait (abstime=0x7fc266dcb860, mutex=0x7fc2223d3528, cond=0x7fc2223d3628) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/include/thr_cond.h:129

#2 my_cond_timedwait (abstime=0x7fc266dcb860, mp=0x7fc2223d3528, cond=0x7fc2223d3628) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/include/thr_cond.h:182

#3 inline_mysql_cond_timedwait (src_file=0x14e60d0 "/mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/rpl_slave.cc", src_line=1884, abstime=0x7fc266dcb860, mutex=0x7fc2223d3528, that=0x7fc2223d3628) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/include/mysql/psi/mysql_thread.h:1229

#4 terminate_slave_thread (thd=0x7fc20b40e000, term_lock=term_lock@entry=0x7fc2223d3528, term_cond=0x7fc2223d3628, slave_running=0x7fc2223d36e4, stop_wait_timeout=stop_wait_timeout@entry=0x7fc266dcb920, need_lock_term=need_lock_term@entry=false) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/rpl_slave.cc:1884

#5 0x0000000000e7a735 in terminate_slave_thread (need_lock_term=<optimized out>, stop_wait_timeout=0x7fc266dcb920, slave_running=<optimized out>, term_cond=<optimized out>, term_lock=0x7fc2223d3528, thd=<optimized out>) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/rpl_slave.cc:1698

#6 terminate_slave_threads (mi=0x7fc259bde000, thread_mask=3, stop_wait_timeout=<optimized out>, need_lock_term=<optimized out>) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/rpl_slave.cc:1698

#7 0x0000000000e87007 in stop_slave (thd=thd@entry=0x7fc20e016000, mi=0x7fc259bde000, net_report=net_report@entry=true, for_one_channel=for_one_channel@entry=false, push_temp_tables_warning=push_temp_tables_warning@entry=0x7fc266dcba10) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/rpl_slave.cc:10344

#8 0x0000000000e873ac in stop_slave (thd=thd@entry=0x7fc20e016000) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/rpl_slave.cc:605

#9 0x0000000000e874c9 in stop_slave_cmd (thd=thd@entry=0x7fc20e016000) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/rpl_slave.cc:759

#10 0x0000000000c9b759 in mysql_execute_command (thd=thd@entry=0x7fc20e016000, first_level=first_level@entry=true) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/sql_parse.cc:3618

#11 0x0000000000ca258d in mysql_parse (thd=thd@entry=0x7fc20e016000, parser_state=parser_state@entry=0x7fc266dcd020) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/sql_parse.cc:5901

#12 0x0000000000ca314d in dispatch_command (thd=thd@entry=0x7fc20e016000, com_data=com_data@entry=0x7fc266dcdc80, command=COM_QUERY) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/sql_parse.cc:1490

#13 0x0000000000ca4b27 in do_command (thd=thd@entry=0x7fc20e016000) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/sql_parse.cc:1021

#14 0x0000000000d6a430 in handle_connection (arg=arg@entry=0x7fc221fbbeb0) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/conn_handler/connection_handler_per_thread.cc:312

#15 0x0000000000eddd84 in pfs_spawn_thread (arg=0x7fc223371e20) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/storage/perfschema/pfs.cc:2190

#16 0x00007fc266805e25 in start_thread () from /lib64/libpthread.so.0

#17 0x00007fc2649d6bad in clone () from /lib64/libc.so.6

Meanwhile the worker thread does not respond (it is still inside Rows_log_event::do_table_scan_and_update):

Thread 6 (Thread 0x7fc266d38700 (LWP 27759)):

#0 page_offset (ptr=0x7fc22b18c155) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/storage/innobase/include/page0page.ic:62

#1 page_rec_is_supremum (rec=0x7fc22b18c155 "") at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/storage/innobase/include/page0page.ic:514

#2 page_cur_is_after_last (cur=0x7fc20acb02a8) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/storage/innobase/include/page0cur.ic:142

#3 btr_pcur_is_after_last_on_page (cursor=0x7fc20acb02a0) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/storage/innobase/include/btr0pcur.ic:170

#4 btr_pcur_move_to_next (cursor=0x7fc20acb02a0, mtr=0x7fc266d37580) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/storage/innobase/include/btr0pcur.ic:353

#5 0x000000000106a819 in row_search_mvcc (buf=buf@entry=0x7fc20e3e8d30 "\377\221\\", mode=PAGE_CUR_G, mode@entry=PAGE_CUR_UNSUPP, prebuilt=0x7fc20acb0088, match_mode=match_mode@entry=0, direction=direction@entry=1) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/storage/innobase/row/row0sel.cc:6266

#6 0x0000000000f570b8 in ha_innobase::general_fetch (this=0x7fc20b069a30, buf=0x7fc20e3e8d30 "\377\221\\", direction=1, match_mode=0) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/storage/innobase/handler/ha_innodb.cc:9854

#7 0x0000000000800fcf in handler::ha_rnd_next (this=0x7fc20b069a30, buf=0x7fc20e3e8d30 "\377\221\\") at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/handler.cc:3146

#8 0x0000000000e403c9 in Rows_log_event::do_table_scan_and_update (this=this@entry=0x7fc20872c2a0, rli=rli@entry=0x7fc20b466000) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/log_event.cc:11096

#9 0x0000000000e3fb2e in Rows_log_event::do_apply_event (this=0x7fc20872c2a0, rli=0x7fc20b466000) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/log_event.cc:11564

#10 0x0000000000e9c04f in slave_worker_exec_job_group (worker=worker@entry=0x7fc20b466000, rli=rli@entry=0x7fc2223d3000) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/rpl_rli_pdb.cc:2671

#11 0x0000000000e7f42b in handle_slave_worker (arg=arg@entry=0x7fc20b466000) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/sql/rpl_slave.cc:6239

#12 0x0000000000eddd84 in pfs_spawn_thread (arg=0x7fc20b4e1220) at /mnt/workspace/percona-server-5.7-binaries-release-rocks/label_exp/min-centos-6-x64/percona-server-5.7.22-22/storage/perfschema/pfs.cc:2190

#13 0x00007fc266805e25 in start_thread () from /lib64/libpthread.so.0

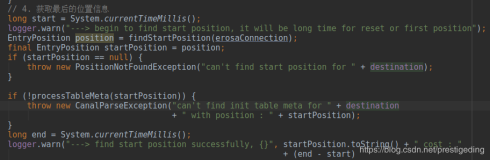

#14 0x00007fc2649d6bad in clone () from /lib64/libc.so.6二、启动流程调用

init_slave

->Rpl_info_factory::create_slave_info_objects

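-> scan_repositories (Rpl_info_factory::scan_repositories): count the channels stored in slave_master_info

-> scan_repositories (Rpl_info_factory::scan_repositories): count the channels stored in slave_relay_log_info

The function reports whether the repository is of table type (INFO_REPOSITORY_TABLE) and returns the number of channels.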

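-> load_channel_names_from_repository: reads the channel names and adds them to the channel list

-> in FILE mode there must be exactly one channel, the default channel; only its name is added to the channel list

-> in TABLE mode the names are read directly from the table and added to the channel list

-> Rpl_info_factory::load_channel_names_from_table reads the table

It loops over the rows and adds each channel name.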
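Here is a small sketch of the channel-list rule above. It assumes a simplified interface:

- RepoType, load_channel_names and the table_rows argument are hypothetical.
- The default channel is represented by the empty name "".

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Toy model (assumption: NOT MySQL code). "" stands for the default channel.
enum class RepoType { FILE, TABLE };

std::vector<std::string> load_channel_names(RepoType type, std::size_t channel_count,
                                            const std::vector<std::string>& table_rows) {
  std::vector<std::string> channels;
  if (type == RepoType::FILE) {
    // A file repository can only describe the single default channel.
    if (channel_count > 1) throw std::runtime_error("FILE repository supports one channel");
    if (channel_count == 1) channels.push_back("");
  } else {
    // TABLE repository: one row per channel, collect every channel name.
    for (const std::string& name : table_rows) channels.push_back(name);
  }
  return channels;
}
```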

-> loop over the channels

-> Rpl_info_factory::create_mi_and_rli_objects: build the in-memory mi and rli structures for the channel's rows in slave_master_info and slave_relay_log_info

->Rpl_info_factory::create_mi

-> Rpl_info_factory::create_rli: this also reads the number of instances in slave_worker_info

-> pchannel_map->add_mi: add the channel to channel_map

-> load_mi_and_rli_from_repositories: read the data from the tables

-> Master_info::mi_init_info: read the slave_master_info table

-> Master_info::read_info: read the fields

-> Relay_log_info::rli_init_info: read the slave_relay_log_info table (complex logic)

-> initialize Retrieved_Gtid_Set with relay_log.init_gtid_sets (skipped when relay_log_recovery is set)

```cpp
DBUG_PRINT("info", ("Iterating forwards through relay logs, "
                    "updating the Retrieved_Gtid_Set and updating "
                    "IO thread trx parser before start."));
for (it= find(filename_list.begin(), filename_list.end(), *rit);
     it != filename_list.end(); it++) // scan the relay logs forward
{
  const char *filename= it->c_str();
  DBUG_PRINT("info", ("filename='%s'", filename));
  if (read_gtids_and_update_trx_parser_from_relaylog(filename, all_gtids,
                                                     true, trx_parser,
                                                     gtid_partial_trx))
  {
    error= 1;
    goto end;
  }
}
```

->mysql_bin_log::set_previous_gtid_set_relaylog

-> Relay_log_info::read_info: read the fields
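The forward scan in init_gtid_sets amounts to taking the union of the GTIDs found in each relay log, from the starting file onwards. Below is a toy C++ version with these assumptions:

- An in-memory map replaces the real relay log files.
- Gtid, RelayLogs and scan_forward are hypothetical names.
- In the excerpt the starting file is *rit. That presumably comes from an earlier backward pass, which this sketch does not model.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Toy model (assumption: NOT MySQL code). A GTID is (source uuid, transaction number).
using Gtid = std::pair<std::string, long long>;
// Hypothetical stand-in for the relay log files: file name -> GTIDs it contains.
using RelayLogs = std::map<std::string, std::vector<Gtid>>;

// Walk forward from start_file to the end of filename_list and add every GTID
// found to `retrieved`. Returns false if a file is missing (the error path).
bool scan_forward(const std::vector<std::string>& filename_list,
                  const std::string& start_file, const RelayLogs& logs,
                  std::set<Gtid>& retrieved) {
  auto it = std::find(filename_list.begin(), filename_list.end(), start_file);
  if (it == filename_list.end()) return false;
  for (; it != filename_list.end(); ++it) {
    auto f = logs.find(*it);
    if (f == logs.end()) return false;
    retrieved.insert(f->second.begin(), f->second.end());
  }
  return true;
}
```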

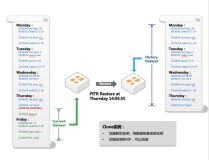

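-> if relay_log_recovery is enabled, call init_recovery, which uses that setting to decide whether to recover. It performs the MTS recovery, overwrites the mi (IO thread) position with the rli (SQL thread) position, and resets rli's relay log read position to the initial position.

For MTS recovery with GTID, the overwrite still goes ahead even when recovery is needed. With file/position-based replication, the overwrite cannot be done.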

->mts_recovery_groups

-> MY_BITMAP *groups= &rli->recovery_groups; // the bitmap used during recovery

-> take the LWM (low-water mark) position as cp:

```cpp
LOG_POS_COORD cp=
{
  (char *) rli->get_group_master_log_name(),
  rli->get_group_master_log_pos()
}; // the checkpoint (LWM) position, cp
```

-> loop over every worker

-> fetch the worker info

-> get the position the worker thread last executed, w_last:

```cpp
LOG_POS_COORD w_last= { const_cast<char*>(worker->get_group_master_log_name()),
                        worker->get_group_master_log_pos() }; // last position executed by this worker
```

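-> compare w_last with cp

-> if w_last is beyond cp, the worker needs recovery

-> add this worker to a temporary array

-> if w_last is at or before cp, no recovery is needed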
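The worker selection compares (file, position) pairs. This sketch uses a hypothetical Coord type, with these assumptions:

- coord_cmp orders by file name and then by position. I am assuming this is how mts_event_coord_cmp behaves.
- Binlog names share the same prefix and a fixed-width numeric suffix, so string order equals log order.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Toy (file, position) coordinate in the spirit of LOG_POS_COORD (hypothetical type).
struct Coord {
  std::string file;
  unsigned long long pos;
};

// -1 / 0 / 1 ordering: by file name first, then by position.
// Assumes names like mysql-bin.000042 whose string order matches log order.
int coord_cmp(const Coord& a, const Coord& b) {
  int f = a.file.compare(b.file);
  if (f != 0) return f < 0 ? -1 : 1;
  if (a.pos != b.pos) return a.pos < b.pos ? -1 : 1;
  return 0;
}

// Indexes of the workers whose w_last lies beyond the checkpoint cp;
// only those need MTS recovery.
std::vector<std::size_t> workers_needing_recovery(const Coord& cp,
                                                  const std::vector<Coord>& w_last) {
  std::vector<std::size_t> out;
  for (std::size_t i = 0; i < w_last.size(); ++i)
    if (coord_cmp(w_last[i], cp) > 0) out.push_back(i);
  return out;
}
```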

-> at this point every worker that needs recovery has been collected

-> if there is at least one, initialize the groups bitmap:

```cpp
bitmap_init(groups, NULL, MTS_MAX_BITS_IN_GROUP, FALSE);
rli->recovery_groups_inited= true; // recovery is needed
bitmap_clear_all(groups);
```

-> loop over every worker that needs recovery

-> get the worker's w_last

-> log a message:

```cpp
sql_print_information("Slave: MTS group recovery relay log info based on "
                      "Worker-Id %lu, "
                      "group_relay_log_name %s, group_relay_log_pos %llu "
                      "group_master_log_name %s, group_master_log_pos %llu",
                      w->id,
                      w->get_group_relay_log_name(),
                      w->get_group_relay_log_pos(),
                      w->get_group_master_log_name(),
                      w->get_group_master_log_pos());
```

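-> get the checkpoint's relay log name and relay log position, then seek there:

```cpp
if (rli->relay_log.find_log_pos(&linfo, rli->get_group_relay_log_name(), 1))
{
  // non-zero return: the relay log was not found (error handling omitted here)
}
offset= rli->get_group_relay_log_pos(); // relay log position to start from
my_b_seek(&log, offset);                // seek to that position
```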

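-> from that position, scan the events one by one

-> an XID event means the end of a transaction has been found

-> take the current event's position, ev_coord

-> compare ev_coord with w_last

-> recovery_group_cnt++: one more transaction that needs recovery

-> log a message:

```cpp
sql_print_information("Slave: MTS group recovery relay log info "
                      "group_master_log_name %s, "
                      "event_master_log_pos %llu.",
                      rli->get_group_master_log_name(),
                      ev->common_header->log_pos); // log it
```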

-> once the scan reaches w_last, i.e. mts_event_coord_cmp(&ev_coord, &w_last) == 0

-> merge this worker's bits into the groups bitmap:

```cpp
for (uint i= (w->checkpoint_seqno + 1) - recovery_group_cnt,
     j= 0; i <= w->checkpoint_seqno; i++, j++)
{
  if (bitmap_is_set(&w->group_executed, i)) // if this bit is set
  {
    DBUG_PRINT("mts", ("Setting bit %u.", j));
    bitmap_fast_test_and_set(groups, j); // set bit j in groups; in the end groups covers the whole recovery window
  }
}
```

-> set not_reached_commit= false; // the end position was found

-> if the end position is never found:

```cpp
sql_print_error("Error looking for file after %s.", linfo.log_file_name);
goto err;
```
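The per-worker scan and the bitmap merge can be traced with plain integers. This toy sketch makes these assumptions:

- There is only one log file, so a coordinate is just a position.
- ScannedEvent and scan_and_merge are hypothetical.
- std::vector<bool> replaces MY_BITMAP.

It counts group-ending events from cp up to and including the one at w_last. It then maps worker bits checkpoint_seqno + 1 - cnt ... checkpoint_seqno onto groups bits 0 ... cnt - 1, using the same index arithmetic as the loop above.

```cpp
#include <cassert>
#include <vector>

// Toy model (assumption: NOT MySQL code; positions are within a single log).
struct ScannedEvent {
  unsigned long long pos;  // end position of the event (like log_pos)
  bool ends_group;         // true for the XID/COMMIT that closes a transaction
};

// Returns the number of groups from cp up to and including w_last, or -1 if
// w_last is never reached. Assumes recovery_group_cnt <= checkpoint_seqno + 1.
int scan_and_merge(const std::vector<ScannedEvent>& from_cp,
                   unsigned long long w_last_pos,
                   const std::vector<bool>& group_executed,  // the worker's bitmap
                   unsigned checkpoint_seqno,
                   std::vector<bool>& groups) {              // shared recovery bitmap
  int recovery_group_cnt = 0;
  for (const ScannedEvent& ev : from_cp) {
    if (!ev.ends_group) continue;
    ++recovery_group_cnt;          // one more transaction in the window
    if (ev.pos == w_last_pos) {    // reached this worker's last group
      unsigned i = checkpoint_seqno + 1 - static_cast<unsigned>(recovery_group_cnt);
      for (unsigned j = 0; i <= checkpoint_seqno; ++i, ++j)
        if (group_executed[i]) groups[j] = true;
      return recovery_group_cnt;
    }
  }
  return -1;  // corresponds to the "Error looking for file" path
}
```

In the usage below, w_last is the third group end, so cnt = 3. With checkpoint_seqno = 5, worker bits 3..5 land on groups bits 0..2.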

-> finally, compare the recovery_group_cnt of every worker, i.e. the number of transactions scanned from cp. Keep the largest as rli->mts_recovery_group_cnt:

```cpp
rli->mts_recovery_group_cnt= (rli->mts_recovery_group_cnt < recovery_group_cnt ?
                              recovery_group_cnt : rli->mts_recovery_group_cnt);
// keep the largest recovery_group_cnt over all workers,
// i.e. the largest number of transactions to recover
```

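-> if there are transactions to recover

-> with GTID, carry on with the overwrite; recovery later simply reads from the GTID position

```cpp
rli->recovery_parallel_workers= 0; // no MTS group recovery
rli->clear_mts_recovery_groups();  // with GTID, clear the groups; recovery later relies on GTIDs
```

-> with file/position replication the overwrite is not allowed, so return directly; the gaps have to be filled later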
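Here is the end of mts_recovery_groups and the GTID/position branch, written as a toy decision function. The assumptions are:

- Outcome and decide are hypothetical names.
- The per-worker counts are passed in as a plain vector.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Toy model (assumption: NOT MySQL code).
enum class Outcome { NOTHING_TO_RECOVER, CONTINUE_WITH_GTID, NEED_GAP_FILL };

// per_worker_cnt: the recovery_group_cnt found for each worker that needed recovery.
Outcome decide(const std::vector<int>& per_worker_cnt, bool gtid_mode_on,
               int* mts_recovery_group_cnt) {
  *mts_recovery_group_cnt = 0;
  for (int c : per_worker_cnt)  // keep the largest window over all workers
    *mts_recovery_group_cnt = std::max(*mts_recovery_group_cnt, c);
  if (*mts_recovery_group_cnt == 0) return Outcome::NOTHING_TO_RECOVER;
  if (gtid_mode_on)
    return Outcome::CONTINUE_WITH_GTID;  // drop the groups, resume from the GTID position
  return Outcome::NEED_GAP_FILL;         // position-based: return now, fill the gaps later
}
```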

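-> recover_relay_log: initialize the rli positions. This overwrites the master info (mi) position with the relay log info (rli) position and resets the relay log position.

-> continue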
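Here is a sketch of the position overwrite described for recover_relay_log. It assumes hypothetical ToyMasterInfo and ToyRelayLogInfo structs that hold only the fields involved. It follows my understanding of the effect:

- The IO thread restarts from the master position the SQL thread has applied.
- The SQL thread starts from the beginning of a fresh relay log.
- As a result, everything not yet applied is fetched again.

BIN_LOG_HEADER_SIZE is 4, the size of the binlog magic header.

```cpp
#include <algorithm>
#include <cassert>
#include <string>

// Toy model (assumption: NOT MySQL code; struct and field names are hypothetical).
const unsigned long long BIN_LOG_HEADER_SIZE = 4;  // offset of the first event in a log

struct ToyMasterInfo {    // where the IO thread will read from on the master
  std::string master_log_name;
  unsigned long long master_log_pos;
};

struct ToyRelayLogInfo {  // what the SQL thread has applied and where it reads
  std::string group_master_log_name;
  unsigned long long group_master_log_pos;
  std::string group_relay_log_name;
  unsigned long long group_relay_log_pos;
};

// Overwrite mi with rli's applied master position; point rli at a fresh relay log.
void toy_recover_relay_log(ToyMasterInfo& mi, ToyRelayLogInfo& rli,
                           const std::string& new_relay_log) {
  if (rli.group_master_log_name.empty()) return;  // nothing recorded yet
  mi.master_log_name = rli.group_master_log_name;
  mi.master_log_pos = std::max(BIN_LOG_HEADER_SIZE, rli.group_master_log_pos);
  rli.group_relay_log_name = new_relay_log;
  rli.group_relay_log_pos = BIN_LOG_HEADER_SIZE;
}
```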

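-> if replication is position-based and there are transactions to recover, MTS calls

fill_mts_gaps_and_recover (MTS recovery?)

-> the slave coordinator thread is started to perform the recovery

-> once recovery finishes, the relay log is opened at the recovered position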

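-> check each channel, mainly whether the GTID settings agree with the channel's configuration. A mismatch raises an error, e.g. gtid_mode is OFF at startup but the channel uses AUTO_POSITION.

-> check_slave_sql_config_conflict: conflict checks

-> if opt_slave_preserve_commit_order is set and slave_parallel_workers is greater than 0

-> error if the parallel type is MTS_PARALLEL_TYPE_DB_NAME (database mode)

-> error if opt_bin_log or opt_log_slave_updates is not set

In other words, if slave_preserve_commit_order is set and MTS is enabled, slave_parallel_type must be LOGICAL_CLOCK, and both log_bin and log_slave_updates must be enabled.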

-> if slave_parallel_workers is greater than 0, slave_parallel_type is not LOGICAL_CLOCK, and the channel is a Group Replication (MGR) channel

-> an error is raised
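Here are the checks from check_slave_sql_config_conflict described above, written as a toy function. The assumptions are:

- ToyConfig, ParallelType and check_conflict are hypothetical.
- The error strings are made up for this sketch and are not MySQL's messages.

```cpp
#include <cassert>
#include <string>

// Toy model (assumption: NOT MySQL code).
enum class ParallelType { DATABASE, LOGICAL_CLOCK };

struct ToyConfig {
  bool preserve_commit_order;      // slave_preserve_commit_order
  unsigned parallel_workers;       // slave_parallel_workers
  ParallelType parallel_type;      // slave_parallel_type
  bool log_bin;                    // opt_bin_log
  bool log_slave_updates;          // opt_log_slave_updates
  bool group_replication_channel;  // channel belongs to Group Replication
};

// Returns "" when the configuration is accepted, otherwise the reason.
std::string check_conflict(const ToyConfig& c) {
  if (c.preserve_commit_order && c.parallel_workers > 0) {
    if (c.parallel_type == ParallelType::DATABASE)
      return "preserve_commit_order needs LOGICAL_CLOCK";
    if (!c.log_bin || !c.log_slave_updates)
      return "preserve_commit_order needs log_bin and log_slave_updates";
  }
  if (c.parallel_workers > 0 && c.parallel_type != ParallelType::LOGICAL_CLOCK &&
      c.group_replication_channel)
    return "group replication channel needs LOGICAL_CLOCK";
  return "";
}
```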

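-> unless opt_skip_slave_start is set, start the threads

-> loop over each channel

-> start each channel with start_slave_threads

-> if auto_position is set but GTID mode is off, starting the IO thread fails

-> mts_recovery_groups: performs MTS recovery? (to be studied further)

-> start_slave_thread starts the IO or the SQL thread, which eventually runs handle_slave_io / handle_slave_sql

III. Stack frames with the repository settings (master_info_repository / relay_log_info_repository) set to TABLE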

These were captured under MTS. Stack frames when a user table is updated:

(gdb) bt

#0 row_update_for_mysql_using_upd_graph (mysql_rec=0x7ffe78014c58 "\376\062", prebuilt=0x7ffe78015100)

at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/row/row0mysql.cc:2955

#1 0x0000000001b3d6f1 in row_update_for_mysql (mysql_rec=0x7ffe78014c58 "\376\062", prebuilt=0x7ffe78015100)

at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/row/row0mysql.cc:3131

#2 0x00000000019d42ff in ha_innobase::update_row (this=0x7ffe780146d0, old_row=0x7ffe78014c58 "\376\062", new_row=0x7ffe78014c10 "\376\062")

at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/handler/ha_innodb.cc:9018

#3 0x0000000000f909cb in handler::ha_update_row (this=0x7ffe780146d0, old_data=0x7ffe78014c58 "\376\062", new_data=0x7ffe78014c10 "\376\062")

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/handler.cc:8509

#4 0x0000000001860831 in Update_rows_log_event::do_exec_row (this=0x7ffe7402f6b0, rli=0x7ffe74024a90)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/log_event.cc:13322

#5 0x0000000001858059 in Rows_log_event::do_apply_row (this=0x7ffe7402f6b0, rli=0x7ffe74024a90)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/log_event.cc:10395

#6 0x0000000001859f25 in Rows_log_event::do_table_scan_and_update (this=0x7ffe7402f6b0, rli=0x7ffe74024a90)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/log_event.cc:11137

#7 0x000000000185b0b5 in Rows_log_event::do_apply_event (this=0x7ffe7402f6b0, rli=0x7ffe74024a90)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/log_event.cc:11564

#8 0x000000000186acf2 in Log_event::do_apply_event_worker (this=0x7ffe7402f6b0, w=0x7ffe74024a90)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/log_event.cc:765

#9 0x00000000018e7672 in Slave_worker::slave_worker_exec_event (this=0x7ffe74024a90, ev=0x7ffe7402f6b0)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_rli_pdb.cc:1890

#10 0x00000000018e97ed in slave_worker_exec_job_group (worker=0x7ffe74024a90, rli=0x675e860)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_rli_pdb.cc:2671

#11 0x00000000018c077a in handle_slave_worker (arg=0x7ffe74024a90) at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_slave.cc:6239

#12 0x0000000001945620 in pfs_spawn_thread (arg=0x7ffe74029080) at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/perfschema/pfs.cc:2190

#13 0x00007ffff7bc6aa1 in start_thread () from /lib64/libpthread.so.0

#14 0x00007ffff6719bcd in clone () from /lib64/libc.so.6

(gdb) n

2956 trx_t* trx = prebuilt->trx;

(gdb) n

2957 ulint fk_depth = 0;

(gdb) n

2958 bool got_s_lock = false;

(gdb) n

2960 DBUG_ENTER("row_update_for_mysql_using_upd_graph");

(gdb) n

2962 ut_ad(trx);

(gdb) n

2963 ut_a(prebuilt->magic_n == ROW_PREBUILT_ALLOCATED);

(gdb) n

2964 ut_a(prebuilt->magic_n2 == ROW_PREBUILT_ALLOCATED);

(gdb) n

2967 if (prebuilt->table->ibd_file_missing) {

(gdb) n

2977 if(srv_force_recovery) {

(gdb) p trx->id

$1 = 350783

(gdb) p table->name->m_name

$2 = 0x7ffe780130f8 "testmts/tii"

(gdb) c

Continuing.

Stack frames when slave_worker_info is updated:

Breakpoint 3, row_update_for_mysql_using_upd_graph (mysql_rec=0x7ffe7800f680 "\001", prebuilt=0x7ffe78010a50)

at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/row/row0mysql.cc:2955

2955 dict_table_t* table = prebuilt->table;

(gdb) bt

#0 row_update_for_mysql_using_upd_graph (mysql_rec=0x7ffe7800f680 "\001", prebuilt=0x7ffe78010a50)

at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/row/row0mysql.cc:2955

#1 0x0000000001b3d6f1 in row_update_for_mysql (mysql_rec=0x7ffe7800f680 "\001", prebuilt=0x7ffe78010a50)

at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/row/row0mysql.cc:3131

#2 0x00000000019d42ff in ha_innobase::update_row (this=0x7ffe7800f020, old_row=0x7ffe7800f680 "\001", new_row=0x7ffe7800f560 "\001")

at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/innobase/handler/ha_innodb.cc:9018

#3 0x0000000000f90b70 in handler::ha_update_row (this=0x7ffe7800f020, old_data=0x7ffe7800f680 "\001", new_data=0x7ffe7800f560 "\001")

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/handler.cc:8509

#4 0x00000000018f7f76 in Rpl_info_table::do_flush_info (this=0x7ffe74028cf0, force=true)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_info_table.cc:225

#5 0x00000000018d4376 in Rpl_info_handler::flush_info (this=0x7ffe74028cf0, force=true) at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_info_handler.h:94

#6 0x00000000018e3a8d in Slave_worker::flush_info (this=0x7ffe74024a90, force=true) at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_rli_pdb.cc:441

#7 0x00000000018e4a0e in Slave_worker::commit_positions (this=0x7ffe74024a90, ev=0x7ffe7402e688, ptr_g=0x7ffe7400e880, force=true)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_rli_pdb.cc:719

#8 0x000000000184e2f6 in Xid_apply_log_event::do_apply_event_worker (this=0x7ffe7402e688, w=0x7ffe74024a90)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/log_event.cc:7398

#9 0x00000000018e7672 in Slave_worker::slave_worker_exec_event (this=0x7ffe74024a90, ev=0x7ffe7402e688)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_rli_pdb.cc:1890

#10 0x00000000018e97ed in slave_worker_exec_job_group (worker=0x7ffe74024a90, rli=0x675e860)

at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_rli_pdb.cc:2671

#11 0x00000000018c077a in handle_slave_worker (arg=0x7ffe74024a90) at /root/mysqlall/percona-server-locks-detail-5.7.22/sql/rpl_slave.cc:6239

#12 0x0000000001945620 in pfs_spawn_thread (arg=0x7ffe74029080) at /root/mysqlall/percona-server-locks-detail-5.7.22/storage/perfschema/pfs.cc:2190

#13 0x00007ffff7bc6aa1 in start_thread () from /lib64/libpthread.so.0

#14 0x00007ffff6719bcd in clone () from /lib64/libc.so.6

(gdb) n

2956 trx_t* trx = prebuilt->trx;

(gdb) n

2957 ulint fk_depth = 0;

(gdb) n

2958 bool got_s_lock = false;

(gdb) n

2960 DBUG_ENTER("row_update_for_mysql_using_upd_graph");

(gdb) n

2962 ut_ad(trx);

(gdb) n

2963 ut_a(prebuilt->magic_n == ROW_PREBUILT_ALLOCATED);

(gdb) p trx->id

$3 = 350783

(gdb) p table->name->m_name

$4 = 0x7ffeac013f68 "mysql/slave_worker_info"
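In the two traces above, trx->id is 350783 both times. So with TABLE repositories, the worker's row in mysql.slave_worker_info is updated inside the same InnoDB transaction as the replicated user-table change.

The toy model below shows why that matters; it is not InnoDB (ToyStore and ToyTrx are hypothetical). The data change and the recorded position either both survive or are both lost. After a crash, the position therefore cannot disagree with what was actually committed.

```cpp
#include <cassert>
#include <map>
#include <string>

// Toy model (assumption: NOT InnoDB). A "transaction" buffers writes to two
// tables and applies them together on commit; a crash simply drops the buffer.
struct ToyStore {
  std::map<std::string, std::string> user_table;
  unsigned long long worker_pos = 0;  // stands in for the slave_worker_info row
};

struct ToyTrx {
  ToyStore& store;
  std::map<std::string, std::string> user_changes;
  unsigned long long new_worker_pos = 0;
  bool pos_changed = false;

  explicit ToyTrx(ToyStore& s) : store(s) {}
  void update_user_row(const std::string& k, const std::string& v) { user_changes[k] = v; }
  void update_worker_pos(unsigned long long p) { new_worker_pos = p; pos_changed = true; }
  void commit() {  // both changes become visible together
    for (const auto& kv : user_changes) store.user_table[kv.first] = kv.second;
    if (pos_changed) store.worker_pos = new_worker_pos;
  }
  // A crash before commit() is modelled by discarding the ToyTrx.
};
```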