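In recent years, multi-turn retrieval-based conversation has received increasing attention. In multi-turn response selection, the current message and the previous utterances are taken as input, and the model selects a response that is natural and relevant to the whole context. The model must identify the important information in the previous utterances and correctly model the relationships between utterances so that the conversation stays coherent. [48] encodes the context (the concatenation of all previous utterances and the current message) and the candidate response into a context vector and a response vector with RNN/LSTM-based encoders, and then computes a matching score from these two vectors. [110] selects previous utterances with different strategies and combines them with the current message to form a reformulated context.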
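The scoring step of the dual-encoder approach in [48] can be sketched as a bilinear form followed by a sigmoid, σ(cᵀMr + b). This is a minimal illustration only: the encoders are omitted, and the vectors `c`, `r_good`, `r_bad`, the matrix `M` and the bias `b` are hypothetical stand-ins for the RNN/LSTM outputs and the learned parameters.

```python
import math


def bilinear_score(c, r, M, b=0.0):
    """Dual-encoder style matching probability: sigmoid(c^T M r + b).

    c, r: context and response vectors (lists of floats).
    M: square matrix (list of rows); in a trained model it is learned.
    """
    # M r: map the response vector into the context space
    mr = [sum(m_ij * r_j for m_ij, r_j in zip(row, r)) for row in M]
    # c^T (M r) + b: compare the mapped response with the context
    logit = sum(c_i * mr_i for c_i, mr_i in zip(c, mr)) + b
    return 1.0 / (1.0 + math.exp(-logit))


# Hypothetical 3-d encodings standing in for the RNN/LSTM outputs.
c = [0.5, -1.0, 2.0]
r_good = [0.4, -0.8, 1.5]
r_bad = [-0.5, 1.0, -2.0]
M = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # identity, for illustration only

print(bilinear_score(c, r_good, M))  # high: response points the same way as the context
print(bilinear_score(c, r_bad, M))   # low: response points the opposite way
```

With M fixed to the identity the score reduces to a dot product; learning M lets the model match responses that are related to the context without being lexically similar to it.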

[EMNLP 2016] "Multi-view response selection for human-computer conversation" (Multi-view)

[124] performs context-response matching not only on a word-level context vector but also on an utterance-level context vector.

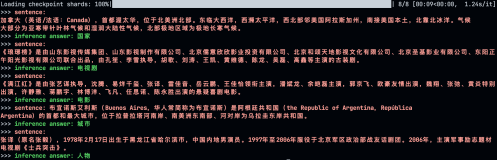

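The authors offer a straightforward way to turn single-turn matching into multi-turn matching: all utterances of the dialogue history are concatenated into one sequence, separated by `_SOS_`, and the whole history is treated as a single "sentence" to be matched against the next one. The concatenated history goes through word embeddings and a GRU to extract word-level features, which are then matched against the candidate response.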
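The input construction for the word-level view can be sketched as follows, assuming whitespace-tokenized utterances. The separator string follows the description above; the function name `flatten_context` is hypothetical, and the embedding and GRU steps are left out.

```python
def flatten_context(utterances, sep="_SOS_"):
    """Concatenate a multi-turn history into a single token sequence.

    `utterances` is a list of token lists (oldest first). `sep` is placed
    between consecutive utterances so that turn boundaries stay visible
    to the word-level GRU.
    """
    tokens = []
    for i, utterance in enumerate(utterances):
        if i > 0:
            tokens.append(sep)
        tokens.extend(utterance)
    return tokens


history = [["how", "do", "i", "mount", "usb"], ["which", "version"], ["16.04"]]
print(flatten_context(history))
# ['how', 'do', 'i', 'mount', 'usb', '_SOS_', 'which', 'version', '_SOS_', '16.04']
```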

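However, it is hard for a GRU to learn a representation of the whole multi-turn dialogue (the context embedding) when the entire word-embedding sequence is fed in at once, so the paper also performs matching at the utterance level: each utterance is encoded with a TextCNN + pooling structure, and a GRU runs over the resulting utterance vectors. The model thus combines a word-level view and an utterance-level view.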

【ACL 2017】【code】《Sequential matching network: A new architecture for multi-turn response selection in retrieval-based chat-bots》(SMN)

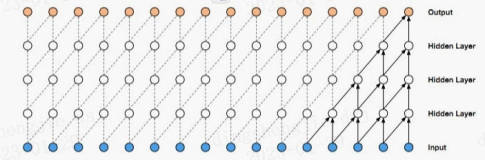

[106]通过使用卷积神经网络在多个粒度级别的上下文中对每个话语进行匹配,进一步改善了对语义关系和上下文信息的利用,然后通过时间顺序累积向量用于模拟话语之间关系的递归神经网络。

作者认为构建问答历史语句和候选回复的交互表示是重要的特征信息, 因此借鉴语义匹配中的匹配矩阵, 并结合CNN和GRU构造模型:

与Multi-view模型类似, 这里作者也考虑同事提取词汇级和语句级的特征, 分别得到两个匹配矩阵M1和M2, 具体的:

- Word-Matching-M1: 对两句话的词做word embedding, 再用dot(ei,ej)计算矩阵元素

- Utterance-Matching-M2: 对两句话的词做word embedding, 再过一层GRU提取隐状态变量h, 然后用dot(hi,A*hj)计算矩阵元素
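The two matching matrices can be sketched in plain Python. The inputs are hypothetical toy values: in SMN the hidden states come from a GRU and A is learned, whereas here the word vectors are reused as "hidden states" only to keep the example short. The function names are also hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def word_matching(U, R):
    """M1[i][j] = e_{u,i} . e_{r,j}: word-embedding similarity."""
    return [[dot(u, r) for r in R] for u in U]


def utterance_matching(HU, HR, A):
    """M2[i][j] = h_{u,i}^T A h_{r,j}: bilinear similarity of hidden states."""
    A_hr = [[dot(row, h) for row in A] for h in HR]  # A h_{r,j} for every j
    return [[dot(hu, ahr) for ahr in A_hr] for hu in HU]


# Hypothetical toy inputs: 3 utterance words and 2 response words in 2-d.
U = [[1, 0], [0, 1], [1, 1]]
R = [[1, 0], [0, 2]]
A = [[0, 1], [1, 0]]  # stands in for the learned matrix A

M1 = word_matching(U, R)
M2 = utterance_matching(U, R, A)  # U, R reused as "hidden states" for brevity
channels = [M1, M2]  # the two channels passed to the CNN
print(M1)  # [[1, 0], [0, 2], [1, 2]]
print(M2)  # [[0, 2], [1, 0], [1, 2]]
```

Both matrices have shape (utterance length, response length), which is what allows them to be stacked as two channels of one image.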

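The two matching matrices are treated as two channels and passed through CNN + pooling, giving one interaction feature v_i per utterance-response pair. The sequence {v_i} is fed into a second GRU, which produces {h'_i}.

For the final prediction layer, the authors design three ways of using the hidden states {h'_i}:

- last: only the last state h'_last is passed to the softmax to compute the score

- linearly combined: a linear weighting of {h'_i} is passed to the softmax

- attention: an attention mechanism computes the weights of {h'_i}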
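The three options can be compared in a few lines. This sketch rests on simplifying assumptions: the weights `w` and the attention `query` are hypothetical stand-ins for learned parameters, and the attention variant scores each h'_i against a single query vector, which is simpler than the scoring function in the SMN paper. Each function returns the vector that would then be passed to the softmax classifier.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights, vectors):
    """Sum over i of weights[i] * vectors[i]."""
    dim = len(vectors[0])
    return [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(dim)]


def combine_last(H):
    """'last': keep only the final hidden state."""
    return H[-1]


def combine_linear(H, w):
    """'linearly combined': one (learned) scalar weight per turn."""
    return weighted_sum(w, H)


def combine_attention(H, query):
    """'attention' (simplified): softmax over query . h'_i gives the weights."""
    scores = [sum(q * h for q, h in zip(query, vec)) for vec in H]
    return weighted_sum(softmax(scores), H)


H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical {h'_i} for 3 turns
print(combine_last(H))                     # [1.0, 1.0]
print(combine_linear(H, [0.2, 0.3, 0.5]))
print(combine_attention(H, [0.0, 0.0]))    # zero query: uniform weights, i.e. the mean
```

The difference is where the turn weights come from: fixed to the last turn, fixed per position after training, or recomputed for every input.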

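According to the authors' experiments, SMN with attention performs best but is the most complex to train, while last is the simplest and generally outperforms linearly combined.

[COLING 2018] [code] "Modeling Multi-turn Conversation with Deep Utterance Aggregation" (DUA)

[125], from COLING 2018, points out that models such as Multi-view and SMN treat the dialogue history as a whole; in other words, every utterance is treated as equally relevant to the response. This ignores the internal structure of the history: a conversation often covers several topics, and its words and utterances differ in importance. To account for these properties of the dialogue history, the authors design the DUA model, which has five parts:

- Part 1: standard word embeddings followed by a GRU.

- Part 2: addresses the interaction problem within the dialogue history described above. Although every utterance in the history influences the response, the last utterance is usually the most important, so in this layer each utterance (except the last) and the response are aggregated with the last utterance. Three aggregation operations are considered: concatenation, element-wise summation, and element-wise multiplication; concatenation works best.

- Part 3: self-attention over the utterances, which filters out redundancy (for example, empty filler phrases) and extracts key information. Because self-attention discards sequential order, the authors add a GRU on top of the attention output to obtain the order-aware representation P.

- Part 4: follows SMN's strategy for building and processing two-channel matching matrices: the word embeddings from Part 1 and the GRU output P from Part 3 are used to build the word-level and utterance-level matching matrices, which pass through CNN + pooling to obtain interaction features.

- Part 5: the interaction features first pass through a GRU to obtain hidden states h; attention(h, P) is then applied between h and the Part 3 output P, and the result is passed to a softmax to produce the matching prediction.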
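The three aggregation operations of Part 2 can be sketched as follows. To keep it short, each utterance and the response are represented by a single fixed-size vector; this is an assumption of the sketch (in the model they are GRU outputs), and the function names are hypothetical. Only the earlier utterances and the response are fused, as described above.

```python
def aggregate(x, last, mode):
    """Fuse a representation with the last utterance's representation."""
    if mode == "concat":
        return x + last  # list concatenation: the dimension doubles
    if mode == "sum":
        return [a + b for a, b in zip(x, last)]
    if mode == "mul":
        return [a * b for a, b in zip(x, last)]
    raise ValueError(f"unknown aggregation mode: {mode}")


def turns_aware_aggregation(utterances, response, mode="concat"):
    """Aggregate every earlier utterance and the response with the last utterance."""
    last = utterances[-1]
    fused_utterances = [aggregate(u, last, mode) for u in utterances[:-1]]
    fused_response = aggregate(response, last, mode)
    return fused_utterances, fused_response


utterances = [[1, 2], [3, 4], [10, 20]]  # hypothetical vectors; the last one is [10, 20]
response = [5, 6]
for mode in ("concat", "sum", "mul"):
    print(mode, turns_aware_aggregation(utterances, response, mode))
```

Concatenation keeps both inputs intact and lets later layers decide how to combine them, whereas summation and multiplication commit to a fixed interaction; this is one plausible reason why concatenation worked best.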

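[ACL 2018] [code] "Multi-Turn Response Selection for Chatbots with Deep Attention Matching Network" (DAM)

[126] models the semantic relationship between context and response more deeply by applying attention to multi-turn conversation. It moves away from the RNN- and CNN-based representations of earlier models, runs fast on multi-turn contexts, and achieved state-of-the-art results at the time of publication. It uses self-attention and cross-attention to extract features from the response and the context:

- Representation: built mainly from self-attention and cross-attention, both implemented with the same attentive module (scaled dot-product attention with a residual connection and layer normalization, followed by a feed-forward network).

  - Self-attention: each utterance and the response attend to themselves, stacked over layers: U_i^{l+1} = AttentiveModule(U_i^l, U_i^l, U_i^l) and R^{l+1} = AttentiveModule(R^l, R^l, R^l).

  - Cross-attention: at each layer the utterance attends to the response and vice versa: AttentiveModule(U_i^l, R^l, R^l) and AttentiveModule(R^l, U_i^l, U_i^l).

- Utterance-Response Matching: at every layer, a self-attention matching matrix and a cross-attention matching matrix are built from dot products between the utterance and response token representations.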

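- Aggregation

With n utterances, the matching cube Q has n slices, one per utterance-response pair. Each slice has size (n_{u_i}, n_r), the size of the corresponding matching matrices, and each of its pixels stacks the self- and cross-attention matching values of all layers as channels.

3D convolution with max pooling is a natural extension of the typical 2D convolution: its filters and strides are 3D cubes. The features extracted from Q are then passed to a single-layer perceptron that computes the matching score.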
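The representation and matching steps can be sketched in plain Python to make the shape of Q concrete. This is an illustrative simplification, not DAM's implementation: the attentive module keeps only scaled dot-product attention with a residual connection (no layer normalization, feed-forward network, or learned parameters), the embeddings are hypothetical, the function names are hypothetical, and the 3D convolution itself is not shown.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attend(Q, K, V):
    """Simplified attentive module: softmax(Q K^T / sqrt(d)) V plus a residual."""
    d = len(Q[0])
    out = []
    for q in Q:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in K])
        context = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        out.append([a + b for a, b in zip(q, context)])  # residual connection
    return out


def match(A, B):
    """Matching matrix: entry [k][t] = A[k] . B[t]."""
    return [[dot(a, b) for b in B] for a in A]


def slice_for_pair(U, R, n_layers=2):
    """One slice of Q: pixel [k][t] holds 2 * (n_layers + 1) channels."""
    u_layers, r_layers = [U], [R]
    for _ in range(n_layers):  # stacked self-attention
        u_layers.append(attend(u_layers[-1], u_layers[-1], u_layers[-1]))
        r_layers.append(attend(r_layers[-1], r_layers[-1], r_layers[-1]))
    self_maps = [match(u, r) for u, r in zip(u_layers, r_layers)]
    # cross-attention: the utterance attends to the response and vice versa
    cross_maps = [match(attend(u, r, r), attend(r, u, u)) for u, r in zip(u_layers, r_layers)]
    maps = self_maps + cross_maps
    return [[[m[k][t] for m in maps] for t in range(len(R))] for k in range(len(U))]


# Hypothetical 2-d embeddings; utterances padded to the same length (3 tokens).
U1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
U2 = [[0.5, 0.5], [1.0, -1.0], [0.0, 0.0]]
R = [[1.0, 0.0], [0.0, 1.0]]
Q = [slice_for_pair(U, R) for U in (U1, U2)]  # shape: n x n_u x n_r x 2(L+1)
print(len(Q), len(Q[0]), len(Q[0][0]), len(Q[0][0][0]))  # 2 3 2 6
```

Layer 0 (the raw embeddings) is kept as its own channel, so each pixel carries matching evidence from shallow to deep representations; the 3D convolution can then pick up patterns across utterances, tokens, and layers at once.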

The model is trained by minimizing the negative log-likelihood of the matching labels.

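[WSDM 2019] "Multi-Representation Fusion Network for Multi-turn Response Selection in Retrieval-based Chatbots" (MRFN)

The motivation of [127] builds on the interaction-based line of work in multi-turn retrieval-based dialogue of recent years: Multi-view introduced interaction, SMN was built entirely on interaction, and DAM added multi-layer interaction. It has become a common view that matching at more granularities works better. The open question is how to design the hierarchy among the granularities while avoiding unnecessary computation. The authors divide the representations into three granularities (word, short-term, and long-term), with six representations in total:

- Word

  - Character embedding: a character-level CNN (n-gram) handles typos and OOV words

  - Word2Vec: plain word2vec embeddings; contextual embeddings such as ELMo or BERT would likely work better, but at a noticeably higher computational cost

- Contextual (short-term)

  - Sequential: a GRU captures information about sub-spans within the sentence; an RNN preserves relationships between nearby words

  - Local: a CNN captures n-gram information; convolution and pooling amount to capturing the n-gram around each center word

- Attention-based (long-term, as in DAM)

  - Self-attention

  - Cross-attention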

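Fusion combines these six representations in a simple way: the six matrices are concatenated into one. Depending on whether fusion happens at an early, intermediate, or late stage, there are three strategies:

- FES (early fusion)

  - In the paper's model figure, columns 1 to 2 perform the fusion.

  - Columns 2 to 3 compute the utterance-response (U-R) interaction features.

  - A word-level GRU then produces v, an utterance-level GRU follows, and an MLP finally predicts the matching score.

- FIE (intermediate fusion)

  - First compute the U-R interaction features for each representation, then fuse them; the rest is the same as FES.

- FLE (late fusion)

  - First compute the U-R interaction features for each representation, then run the word-level and utterance-level GRUs for each representation separately, and fuse only at the final MLP.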

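Experiments show that FLE performs best.

The authors also examine how much each representation contributes as the number of context turns and the utterance length vary.

The conclusion is that the contextual representations contribute the most. With both few and many turns, contextual representations outperform attention-based ones. With few turns, RNN-style models may genuinely rival attention; with many turns, the current response is arguably related more to nearby turns than to distant ones, so contextual representations, which capture local context better, may work better. As utterances get longer, the advantage of attention becomes more pronounced.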

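[arXiv 2019] "Interactive Matching Network for Multi-Turn Response Selection in Retrieval-Based Chatbots" (IMN)

The overall architecture of [128] is as follows:

- Word Representation Layer

  - pretrained word embeddings

  - word embeddings trained on the training set

  - CNN-based character embeddings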

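- Sentence Encoding Layer

Inspired by ELMo, the authors represent each utterance with a multi-layer BiLSTM and call this module the attentive hierarchical recurrent encoder (AHRE). The hidden states of all BiLSTM layers are summed with learned weights.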
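The weighted combination of layers can be sketched as an ELMo-style scalar mix. The softmax normalization of the per-layer weights is an assumption borrowed from ELMo, the function name is hypothetical, and the BiLSTM itself is omitted.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_layer_sum(layer_states, layer_logits):
    """ELMo-style scalar mix of per-layer hidden states.

    layer_states[l][t] is the hidden vector of token t at layer l;
    layer_logits[l] is one (learned) scalar per layer, normalized by softmax.
    """
    weights = softmax(layer_logits)
    n_tokens = len(layer_states[0])
    dim = len(layer_states[0][0])
    return [
        [sum(w * layer[t][k] for w, layer in zip(weights, layer_states)) for k in range(dim)]
        for t in range(n_tokens)
    ]


# Hypothetical outputs of a 2-layer BiLSTM for a 2-token utterance.
layer0 = [[1.0, 0.0], [0.0, 1.0]]
layer1 = [[3.0, 0.0], [0.0, 3.0]]
print(weighted_layer_sum([layer0, layer1], [0.0, 0.0]))  # equal weights: [[2.0, 0.0], [0.0, 2.0]]
```

Because the weights are learned, the model can decide how much it relies on lower (more lexical) versus higher (more contextual) layers instead of using only the top layer.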

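- Matching Layer

The representations of the n utterances in the context are concatenated.

The matching degree between each utterance and the candidate response is computed.

Using these matching degrees, the utterances and the response interact, and each reconstructs the other's representation, so that their mutual matching information is fused.

The matching information and the interaction information are then combined.

The combined representation is split back into per-utterance granularity.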
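The matching steps can be sketched as follows. We assume an ESIM-style formulation: dot-product attention between the concatenated context tokens and the response tokens in both directions, then the fusion [x; x̄; x − x̄; x ⊙ x̄]. The function names and toy vectors are hypothetical, and no learned parameters are involved.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def weighted_sum(weights, vectors):
    return [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(len(vectors[0]))]


def cross_align(C, R):
    """Dot-product attention in both directions between context and response tokens."""
    E = [[dot(c, r) for r in R] for c in C]
    C_bar = [weighted_sum(softmax(row), R) for row in E]  # response info per context token
    R_bar = [weighted_sum(softmax([E[i][j] for i in range(len(C))]), C) for j in range(len(R))]
    return C_bar, R_bar


def enhance(X, X_bar):
    """Fuse original and aligned vectors: [x; x_bar; x - x_bar; x * x_bar]."""
    return [
        x + xb + [a - b for a, b in zip(x, xb)] + [a * b for a, b in zip(x, xb)]
        for x, xb in zip(X, X_bar)
    ]


def split_by_lengths(seq, lengths):
    """Split the concatenated context back into per-utterance pieces."""
    parts, start = [], 0
    for n in lengths:
        parts.append(seq[start:start + n])
        start += n
    return parts


utterances = [[[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0]]]  # hypothetical: 2 utterances, 2-d tokens
response = [[1.0, 0.0], [0.5, 0.5]]
C = [tok for u in utterances for tok in u]  # concatenate the utterances
C_bar, R_bar = cross_align(C, response)
C_hat, R_hat = enhance(C, C_bar), enhance(response, R_bar)
per_utterance = split_by_lengths(C_hat, [len(u) for u in utterances])
print([len(p) for p in per_utterance], len(C_hat[0]))  # [2, 1] 8
```

Attending over the concatenated context (rather than one utterance at a time) lets each response token see every context token, and splitting afterwards restores the per-utterance view the aggregation layer needs.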

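- Aggregation Layer

The matched information passes through a BiLSTM to further capture word-level sequential relationships.

Pooling then takes the element-wise max over the hidden states and concatenates it with the last hidden state.

Another BiLSTM captures utterance-level sequential relationships.

Pooling is applied again.

Finally, the context representation c and the response representation r are concatenated and classified with an MLP.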
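The two-level pooling can be sketched as follows. This is a simplified illustration: the word-level and utterance-level BiLSTMs are replaced by the identity so that only pooling and concatenation are visible, the max-plus-last pooling is assumed at both levels and for the response, the inputs are hypothetical, and the MLP is left out.

```python
def max_and_last(states):
    """Pool a sequence of hidden vectors into [element-wise max over time; last state]."""
    dim = len(states[0])
    max_vec = [max(s[k] for s in states) for k in range(dim)]
    return max_vec + states[-1]


def context_response_features(utterance_states, response_states):
    """Two-level pooling; the real model runs BiLSTMs before each pooling step."""
    # word level: one pooled vector per utterance
    utterance_vecs = [max_and_last(states) for states in utterance_states]
    # utterance level: pool over the sequence of utterance vectors -> context vector c
    c = max_and_last(utterance_vecs)
    r = max_and_last(response_states)
    return c + r  # [c; r], the input to the MLP classifier


u1 = [[1.0, 5.0], [3.0, 2.0], [0.0, 4.0]]  # hypothetical hidden states, 3 tokens
u2 = [[2.0, 1.0]]                          # hypothetical hidden states, 1 token
resp = [[1.0, 1.0], [0.0, 2.0]]
features = context_response_features([u1, u2], resp)
print(features)  # [3.0, 5.0, 2.0, 4.0, 2.0, 1.0, 2.0, 1.0, 1.0, 2.0, 0.0, 2.0]
```

Max pooling keeps the strongest matching signal anywhere in the sequence, while the last state summarizes it in order; concatenating both gives the classifier each kind of evidence.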

References

[1] J. Allwood, J. Nivre, and E. Ahlsén. On the semantics and pragmatics of linguistic feedback. Journal of Semantics, 9(1):1–26, 1992.

[2] R. Artstein, S. Gandhe, J. Gerten, A. Leuski, and D. Traum. Semi-formal evaluation of conversational characters. pages 22–35, 2009.

[3] N. Asghar, P. Poupart, J. Hoey, X. Jiang, and L. Mou. Affective neural response generation. arXiv preprint arXiv:1709.03968, 2017.

[4] N. Asghar, P. Poupart, X. Jiang, and H. Li. Deep active learning for dialogue generation. In Proceedings of the 6th Joint Conference on Lexical and Computational Semantics (*SEM 2017), pages 78–83, 2017.

[5] D. Bahdanau, K. Cho, and Y. Bengio. Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473, 2014.

[6] L. Bahl, P. Brown, P. De Souza, and R. Mercer. Maximum mutual information estimation of hidden Markov model parameters for speech recognition. In Acoustics, Speech, and Signal Processing, IEEE International Conference on ICASSP'86, volume 11, pages 49–52. IEEE, 1986.

[7] A. Bordes, Y. L. Boureau, and J. Weston. Learning end-to-end goal-oriented dialog. In ICLR, 2017.

[8] S. R. Bowman, L. Vilnis, O. Vinyals, A. M. Dai, R. Jozefowicz, and S. Bengio. Generating sentences from a continuous space. In Proceedings of the 20th SIGNLL Conference on Computational Natural Language Learning.

[9] P. F. Brown. The acoustic-modeling problem in automatic speech recognition. Technical report, Carnegie-Mellon Univ Pittsburgh PA Dept of Computer Science, 1987.

[10] E. Bruni and R. Fernández. Adversarial evaluation for open-domain dialogue generation. In Proceedings of the 18th Annual SIGdial Meeting on Discourse and Dialogue, pages 284–288, 2017.

[11] K. Cao and S. Clark. Latent variable dialogue models and their diversity. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, Short Papers, pages 182–187, Valencia, Spain, April 2017. Association for Computational Linguistics.

[12] K. Cho, B. van Merrienboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio. Learning phrase representations using RNN encoder–decoder for statistical machine translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1724–1734, Doha, Qatar, October 2014. Association for Computational Linguistics.

[13] S. Choudhary, P. Srivastava, L. Ungar, and J. Sedoc. Domain aware neural dialog system. arXiv preprint arXiv:1708.00897, 2017.

[14] H. Cuayhuitl, S. Keizer, and O. Lemon. Strategic dialogue management via deep reinforcement learning. arxiv.org, 2015.

[15] L. Deng, G. Tur, X. He, and D. Hakkani-Tur. Use of kernel deep convex networks and end-to-end learning for spoken language understanding. In Spoken Language Technology Workshop (SLT), 2012 IEEE, pages 210–215. IEEE, 2012.

[16] E. L. Denton, S. Chintala, R. Fergus, et al. Deep generative image models using a Laplacian pyramid of adversarial networks. In Advances in Neural Information Processing Systems, pages 1486–1494, 2015.

[17] A. Deoras and R. Sarikaya. Deep belief network based semantic taggers for spoken language understanding. In Interspeech, pages 2713–2717, 2013.

[18] B. Dhingra, L. Li, X. Li, J. Gao, Y.-N. Chen, F. Ahmed, and L. Deng. Towards end-to-end reinforcement learning of dialogue agents for information access. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 484–495, Vancouver, Canada, July 2017. Association for Computational Linguistics.

[19] O. Dušek and F. Jurcicek. A context-aware natural language generator for dialogue systems. In Proceedings of the 17th Annual Meeting of the Special Interest Group on Discourse and Dialogue, pages 185–190, Los Angeles, September 2016. Association for Computational Linguistics.

[20] O. Dušek and F. Jurcicek. Sequence-to-sequence generation for spoken dialogue via deep syntax trees and strings. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 45–51, Berlin, Germany, August 2016. Association for Computational Linguistics.

[21] M. Eric and C. D. Manning. Key-value retrieval networks for task-oriented dialogue. arXiv preprint arXiv:1705.05414, 2017.

[22] M. Ghazvininejad, C. Brockett, M.-W. Chang, B. Dolan, J. Gao, W.-t. Yih, and M. Galley. A knowledge-grounded neural conversation model. arXiv preprint arXiv:1702.01932, 2017.

[23] D. Goddeau, H. Meng, J. Polifroni, S. Seneff, and S. Busayapongchai. A form-based dialogue manager for spoken language applications. In Spoken Language, 1996. ICSLP 96. Proceedings., Fourth International Conference on, volume 2, pages 701–704. IEEE, 1996.

[24] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. Generative adversarial nets. In Advances in Neural Information Processing Systems, pages 2672–2680, 2014.

[25] H. B. Hashemi, A. Asiaee, and R. Kraft. Query intent detection using convolutional neural networks. In International Conference on Web Search and Data Mining, Workshop on Query Understanding, 2016.

[26] M. Henderson, B. Thomson, and S. Young. Deep neural network approach for the dialog state tracking challenge. In Proceedings of the SIGDIAL 2013 Conference, pages 467–471, 2013.

[27] S. Hochreiter and J. Schmidhuber. Long short-term memory. Neural computation, 9(8):1735–1780, 1997.

[28] B. Hu, Z. Lu, H. Li, and Q. Chen. Convolutional neural network architectures for matching natural language sentences. In Advances in Neural Information Processing Systems, pages 2042–2050, 2014.

[29] P.-S. Huang, X. He, J. Gao, L. Deng, A. Acero, and L. Heck. Learning deep structured semantic models for web search using clickthrough data. In Proceedings of the 22nd ACM International Conference on Information & Knowledge Management, pages 2333–2338. ACM, 2013.

[30] Z. Ji, Z. Lu, and H. Li. An information retrieval approach to short text conversation. arXiv preprint arXiv:1408.6988, 2014.

[31] C. Kamm. User interfaces for voice applications. Proceedings of the National Academy of Sciences, 92(22):10031–10037, 1995.

[32] A. Kannan and O. Vinyals. Adversarial evaluation of dialogue models. arXiv preprint arXiv:1701.08198, 2017.

[33] D. P. Kingma, D. J. Rezende, S. Mohamed, and M. Welling. Semi-supervised learning with deep generative models. Advances in Neural Information Processing Systems, 4:3581–3589, 2014.

[34] D. P. Kingma and M. Welling. Auto-encoding variational Bayes. In ICLR, 2014.

[35] S. Lee. Structured discriminative model for dialog state tracking. In SIGDIAL Conference, pages 442–451, 2013.

[36] S. Lee and M. Eskenazi. Recipe for building robust spoken dialog state trackers: Dialog state tracking challenge system description. In SIGDIAL Conference, pages 414–422, 2013.

[37] M. Lewis, D. Yarats, Y. Dauphin, D. Parikh, and D. Batra. Deal or no deal? End-to-end learning of negotiation dialogues. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 2433–2443, Copenhagen, Denmark, September 2017. Association for Computational Linguistics.

[38] J. Li, M. Galley, C. Brockett, J. Gao, and B. Dolan. A diversity-promoting objective function for neural conversation models. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 110–119, San Diego, California, June 2016. Association for Computational Linguistics.

[39] J. Li, M. Galley, C. Brockett, G. Spithourakis, J. Gao, and B. Dolan. A persona-based neural conversation model. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 994–1003, Berlin, Germany, August 2016. Association for Computational Linguistics.

[40] J. Li, A. H. Miller, S. Chopra, M. Ranzato, and J. Weston. Dialogue learning with human-in-the-loop. arXiv preprint arXiv:1611.09823, 2016.

[41] J. Li, A. H. Miller, S. Chopra, M. Ranzato, and J. Weston. Learning through dialogue interactions by asking questions. arXiv preprint, 2017.

[42] J. Li, W. Monroe, and J. Dan. A simple, fast diverse decoding algorithm for neural generation. arXiv preprint arXiv:1611.08562, 2016.

[43] J. Li, W. Monroe, A. Ritter, D. Jurafsky, M. Galley, and J. Gao. Deep reinforcement learning for dialogue generation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 1192–1202, Austin, Texas, November 2016. Association for Computational Linguistics.

[44] J. Li, W. Monroe, T. Shi, S. Jean, A. Ritter, and D. Jurafsky. Adversarial learning for neural dialogue generation. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 2147–2159, Copenhagen, Denmark, September 2017. Association for Computational Linguistics.

[45] X. Li, Y.-N. Chen, L. Li, and J. Gao. End-to-end task-completion neural dialogue systems. arXiv preprint arXiv:1703.01008, 2017.

[46] C.-W. Liu, R. Lowe, I. Serban, M. Noseworthy, L. Charlin, and J. Pineau. How not to evaluate your dialogue system: An empirical study of unsupervised evaluation metrics for dialogue response generation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 2122–2132, Austin, Texas, November 2016. Association for Computational Linguistics.

[47] R. Lowe, M. Noseworthy, I. V. Serban, N. Angelard-Gontier, Y. Bengio, and J. Pineau. Towards an automatic Turing test: Learning to evaluate dialogue responses. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1116–1126, Vancouver, Canada, July 2017. Association for Computational Linguistics.

[48] R. Lowe, N. Pow, I. Serban, and J. Pineau. The Ubuntu dialogue corpus: A large dataset for research in unstructured multi-turn dialogue systems. In Proceedings of the 16th Annual Meeting of the Special Interest Group on Discourse and Dialogue, pages 285–294, Prague, Czech Republic, September 2015. Association for Computational Linguistics.

[49] Z. Lu and H. Li. A deep architecture for matching short texts. In International Conference on Neural Information Processing Systems, pages 1367–1375, 2013.

[50] T. Luong, I. Sutskever, Q. Le, O. Vinyals, and W. Zaremba. Addressing the rare word problem in neural machine translation. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 11–19, Beijing, China, July 2015. Association for Computational Linguistics.

[51] G. Mesnil, X. He, L. Deng, and Y. Bengio. Investigation of recurrent-neural-network architectures and learning methods for spoken language understanding. Interspeech, 2013.

[52] T. Mikolov, M. Karafiát, L. Burget, J. Černocký, and S. Khudanpur. Recurrent neural network based language model. In Interspeech, volume 2, page 3, 2010.

[53] T. Mikolov, I. Sutskever, K. Chen, G. S. Corrado, and J. Dean. Distributed representations of words and phrases and their compositionality. In Advances in Neural Information Processing Systems, pages 3111–3119, 2013.

[54] A. Miller, A. Fisch, J. Dodge, A.-H. Karimi, A. Bordes, and J. Weston. Key-value memory networks for directly reading documents. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 1400–1409, Austin, Texas, November 2016. Association for Computational Linguistics.

[55] K. Mo, S. Li, Y. Zhang, J. Li, and Q. Yang. Personalizing a dialogue system with transfer reinforcement learning. arXiv preprint, 2016.

[56] S. Möller, R. Englert, K. Engelbrecht, V. Hafner, A. Jameson, A. Oulasvirta, A. Raake, and N. Reithinger. Memo: towards automatic usability evaluation of spoken dialogue services by user error simulations. In Ninth International Conference on Spoken Language Processing, 2006.

[57] L. Mou, Y. Song, R. Yan, G. Li, L. Zhang, and Z. Jin. Sequence to backward and forward sequences: A content-introducing approach to generative short-text conversation. In Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, pages 3349–3358, Osaka, Japan, December 2016. The COLING 2016 Organizing Committee.

[58] N. Mrkšić, D. Ó Séaghdha, B. Thomson, M. Gasic, P.-H. Su, D. Vandyke, T.-H. Wen, and S. Young. Multi-domain dialog state tracking using recurrent neural networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 2: Short Papers), pages 794–799, Beijing, China, July 2015. Association for Computational Linguistics.

[59] N. Mrkšić, D. Ó Séaghdha, T.-H. Wen, B. Thomson, and S. Young. Neural belief tracker: Data-driven dialogue state tracking. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1777–1788, Vancouver, Canada, July 2017. Association for Computational Linguistics.

[60] N. Papernot, M. Abadi, Ú. Erlingsson, I. Goodfellow, and K. Talwar. Semi-supervised knowledge transfer for deep learning from private training data. ICLR, 2017.

[61] Q. Qian, M. Huang, H. Zhao, J. Xu, and X. Zhu. Assigning personality/identity to a chatting machine for coherent conversation generation. arXiv preprint arXiv:1706.02861, 2017.

[62] M. Qiu, F.-L. Li, S. Wang, X. Gao, Y. Chen, W. Zhao, H. Chen, J. Huang, and W. Chu. AliMe chat: A sequence to sequence and rerank based chatbot engine. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), volume 2, pages 498–503, 2017.

[63] H. Ren, W. Xu, Y. Zhang, and Y. Yan. Dialog state tracking using conditional random fields. In SIGDIAL Conference, pages 457–461, 2013.

[64] A. Ritter, C. Cherry, and W. B. Dolan. Data-driven response generation in social media. In Conference on Empirical Methods in Natural Language Processing, pages 583–593, 2011.

[65] G. Salton and C. Buckley. Term-weighting approaches in automatic text retrieval. Information Processing & Management, 24(5):513–523, 1988.

[66] R. Sarikaya, G. E. Hinton, and B. Ramabhadran. Deep belief nets for natural language call-routing. In IEEE International Conference on Acoustics, Speech and Signal Processing, pages 5680–5683, 2011.

[67] I. Serban, T. Klinger, G. Tesauro, K. Talamadupula, B. Zhou, Y. Bengio, and A. Courville. Multiresolution recurrent neural networks: An application to dialogue response generation. In AAAI Conference on Artificial Intelligence, 2017.

[68] I. Serban, A. Sordoni, Y. Bengio, A. Courville, and J. Pineau. Building end-to-end dialogue systems using generative hierarchical neural network models. In AAAI Conference on Artificial Intelligence, 2016.

[69] I. Serban, A. Sordoni, R. Lowe, L. Charlin, J. Pineau, A. Courville, and Y. Bengio. A hierarchical latent variable encoder-decoder model for generating dialogues. In AAAI Conference on Artificial Intelligence, 2017.

[70] I. V. Serban, C. Sankar, M. Germain, S. Zhang, Z. Lin, S. Subramanian, T. Kim, M. Pieper, S. Chandar, N. R. Ke, et al. A deep reinforcement learning chatbot. arXiv preprint arXiv:1709.02349, 2017.

[71] L. Shang, Z. Lu, and H. Li. Neural responding machine for short-text conversation. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 1577–1586, Beijing, China, July 2015. Association for Computational Linguistics.

[72] L. Shao, S. Gouws, D. Britz, A. Goldie, and B. Strope. Generating long and diverse responses with neural conversation models. arXiv preprint arXiv:1701.03185, 2017.

[73] X. Shen, H. Su, Y. Li, W. Li, S. Niu, Y. Zhao, A. Aizawa, and G. Long. A conditional variational framework for dialog generation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 504–509, Vancouver, Canada, July 2017. Association for Computational Linguistics.

[74] Y. Shen, X. He, J. Gao, L. Deng, and G. Mesnil. Learning semantic representations using convolutional neural networks for web search. In Proceedings of the 23rd International Conference on World Wide Web, pages 373–374. ACM, 2014.

[75] K. Sohn, X. Yan, and H. Lee. Learning structured output representation using deep conditional generative models. In International Conference on Neural Information Processing Systems, pages 3483–3491, 2015.

[76] Y. Song, R. Yan, X. Li, D. Zhao, and M. Zhang. Two are better than one: An ensemble of retrieval- and generation-based dialog systems. arXiv preprint arXiv:1610.07149, 2016.

[77] A. Sordoni, M. Galley, M. Auli, C. Brockett, Y. Ji, M. Mitchell, J.-Y. Nie, J. Gao, and B. Dolan. A neural network approach to context-sensitive generation of conversational responses. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 196–205, Denver, Colorado, May–June 2015. Association for Computational Linguistics.

[78] A. Stent, M. Marge, and M. Singhai. Evaluating evaluation methods for generation in the presence of variation. In International Conference on Computational Linguistics and Intelligent Text Processing, pages 341–351, 2005.

[79] A. Stent, R. Prasad, and M. Walker. Trainable sentence planning for complex information presentation in spoken dialog systems. In Proceedings of the 42nd Annual Meeting of the Association for Computational Linguistics, page 79. Association for Computational Linguistics, 2004.

[80] P.-H. Su, D. Vandyke, M. Gasic, D. Kim, N. Mrksic, T.-H. Wen, and S. Young. Learning from real users: Rating dialogue success with neural networks for reinforcement learning in spoken dialogue systems. arXiv preprint arXiv:1508.03386, 2015.

[81] C. Tao, L. Mou, D. Zhao, and R. Yan. RUBER: An unsupervised method for automatic evaluation of open-domain dialog systems. arXiv preprint arXiv:1701.03079, 2017.

[82] Z. Tian, R. Yan, L. Mou, Y. Song, Y. Feng, and D. Zhao. How to make context more useful? An empirical study on context-aware neural conversational models. In Meeting of the Association for Computational Linguistics, pages 231–236, 2017.

[83] V. K. Tran and L. M. Nguyen. Semantic refinement GRU-based neural language generation for spoken dialogue systems. In PACLING, 2017.

[84] G. Tur, L. Deng, D. Hakkani-Tür, and X. He. Towards deeper understanding: Deep convex networks for semantic utterance classification. In Acoustics, Speech and Signal Processing (ICASSP), 2012 IEEE International Conference on, pages 5045–5048. IEEE, 2012.

[85] A. M. Turing. Computing machinery and intelligence. Mind, 59(236):433–460, 1950.

[86] A. K. Vijayakumar, M. Cogswell, R. R. Selvaraju, Q. Sun, S. Lee, D. Crandall, and D. Batra. Diverse beam search: Decoding diverse solutions from neural sequence models. arXiv preprint arXiv:1610.02424, 2016.

[93] Z. Wang and O. Lemon. A simple and generic belief tracking mechanism for the dialog state tracking challenge: On the believability of observed information. In SIGDIAL Conference, pages 423–432, 2013.

[98] J. Williams. Multi-domain learning and generalization in dialog state tracking. In SIGDIAL Conference, pages 433–441, 2013.

[99] J. Williams, A. Raux, D. Ramachandran, and A. Black. The dialog state tracking challenge. In Proceedings of the SIGDIAL 2013 Conference, pages 404–413, 2013.

[100] J. D. Williams. A belief tracking challenge task for spoken dialog systems. In NAACL-HLT Workshop on Future Directions and Needs in the Spoken Dialog Community: Tools and Data, pages 23–24, 2012.

[101] J. D. Williams. Web-style ranking and SLU combination for dialog state tracking. In SIGDIAL Conference, pages 282–291, 2014.

[106] Y. Wu, W. Wu, C. Xing, M. Zhou, and Z. Li. Sequential matching network: A new architecture for multi-turn response selection in retrieval-based chat-bots. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 496–505, Vancouver, Canada, July 2017. Association for Computational Linguistics.

[108] C. Xing, W. Wu, Y. Wu, J. Liu, Y. Huang, M. Zhou, and W.-Y. Ma. Topic aware neural response generation. In AAAI Conference on Artificial Intelligence, 2017.

[110] R. Yan, Y. Song, and H. Wu. Learning to respond with deep neural networks for retrieval-based human-computer conversation system. In Proceedings of the 39th International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR '16, pages 55–64, New York, NY, USA, 2016. ACM.

[111] Z. Yan, N. Duan, P. Chen, M. Zhou, J. Zhou, and Z. Li. Building task-oriented dialogue systems for online shopping. In AAAI Conference on Artificial Intelligence, 2017.

[113] K. Yao, B. Peng, Y. Zhang, D. Yu, G. Zweig, and Y. Shi. Spoken language understanding using long short-term memory neural networks. In IEEE Institute of Electrical & Electronics Engineers, pages 189–194, 2014.

[115] K. Yao, G. Zweig, M. Y. Hwang, Y. Shi, and D. Yu. Recurrent neural networks for language understanding. In Interspeech, 2013.

[119] W. Zhang, T. Liu, Y. Wang, and Q. Zhu. Neural personalized response generation as domain adaptation. arXiv preprint arXiv:1701.02073, 2017.

[122] H. Zhou, M. Huang, T. Zhang, X. Zhu, and B. Liu. Emotional chatting machine: Emotional conversation generation with internal and external memory. arXiv preprint arXiv:1704.01074, 2017.

[123] H. Zhou, M. Huang, and X. Zhu. Context-aware natural language generation for spoken dialogue systems. In COLING, pages 2032–2041, 2016.

[124] X. Zhou, D. Dong, H. Wu, S. Zhao, D. Yu, H. Tian, X. Liu, and R. Yan. Multi-view response selection for human-computer conversation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 372–381, Austin, Texas, November 2016. Association for Computational Linguistics.

[125] Z. Zhang, J. Li, P. Zhu, et al. Modeling multi-turn conversation with deep utterance aggregation. In Proceedings of the 27th International Conference on Computational Linguistics (COLING 2018). Association for Computational Linguistics, 2018.

[126] X. Zhou, L. Li, D. Dong, Y. Liu, Y. Chen, W. X. Zhao, D. Yu, and H. Wu. Multi-turn response selection for chatbots with deep attention matching network. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), 2018. ACL Anthology P18-1103.

[127] C. Tao et al. Multi-representation fusion network for multi-turn response selection in retrieval-based chatbots. In WSDM, 2019.

[128] J.-C. Gu et al. Interactive matching network for multi-turn response selection in retrieval-based chatbots. 2019.

[129] X. Zhao, C. Tao, W. Wu, C. Xu, D. Zhao, and R. Yan. A document-grounded matching network for response selection in retrieval-based chatbots.