
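At work I needed to remove one storage server from a Ceph cluster so it could be repurposed. The cluster would still have enough capacity after the removal, so the operation was feasible. But the cluster had been running for a long time and every server held a lot of data, so removing one without losing any data looked far from trivial.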

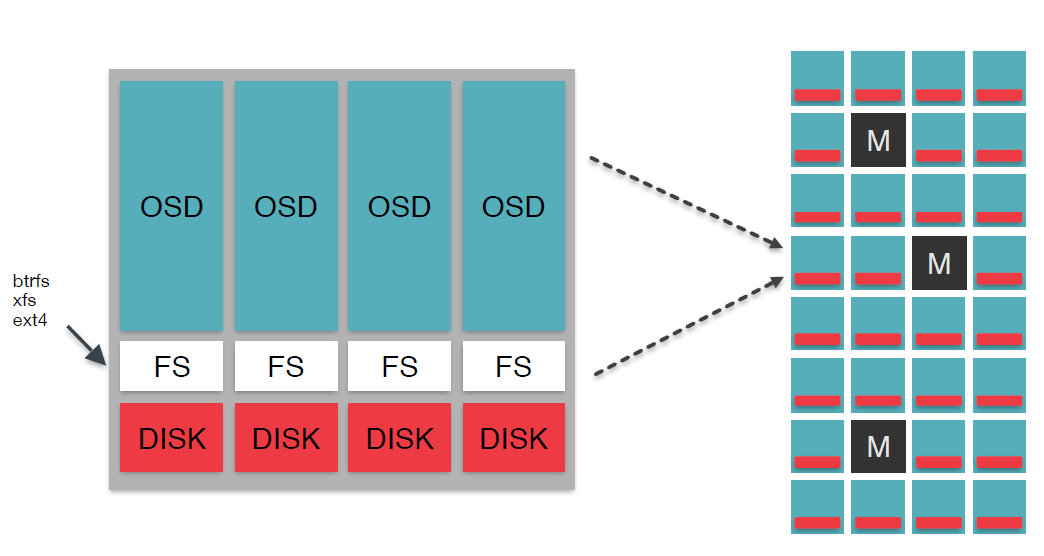

1. OSD layout

First, look at how the OSDs are laid out:

$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 265.25757 root default

-5 132.62878 host osd7

24 hdd 5.52620 osd.24 up 1.00000 1.00000

25 hdd 5.52620 osd.25 up 1.00000 1.00000

26 hdd 5.52620 osd.26 up 1.00000 1.00000

27 hdd 5.52620 osd.27 up 1.00000 1.00000

28 hdd 5.52620 osd.28 up 1.00000 1.00000

29 hdd 5.52620 osd.29 up 1.00000 1.00000

30 hdd 5.52620 osd.30 up 1.00000 1.00000

31 hdd 5.52620 osd.31 up 1.00000 1.00000

32 hdd 5.52620 osd.32 up 1.00000 1.00000

33 hdd 5.52620 osd.33 up 1.00000 1.00000

34 hdd 5.52620 osd.34 up 1.00000 1.00000

35 hdd 5.52620 osd.35 up 1.00000 1.00000

36 hdd 5.52620 osd.36 up 1.00000 1.00000

37 hdd 5.52620 osd.37 up 1.00000 1.00000

38 hdd 5.52620 osd.38 up 1.00000 1.00000

39 hdd 5.52620 osd.39 up 1.00000 1.00000

40 hdd 5.52620 osd.40 up 1.00000 1.00000

41 hdd 5.52620 osd.41 up 1.00000 1.00000

42 hdd 5.52620 osd.42 up 1.00000 1.00000

43 hdd 5.52620 osd.43 up 1.00000 1.00000

44 hdd 5.52620 osd.44 up 1.00000 1.00000

45 hdd 5.52620 osd.45 up 1.00000 1.00000

46 hdd 5.52620 osd.46 up 1.00000 1.00000

47 hdd 5.52620 osd.47 up 1.00000 1.00000

-3 132.62878 host osd8

0 hdd 5.52620 osd.0 up 1.00000 1.00000

1 hdd 5.52620 osd.1 up 1.00000 1.00000

2 hdd 5.52620 osd.2 up 1.00000 1.00000

3 hdd 5.52620 osd.3 up 1.00000 1.00000

4 hdd 5.52620 osd.4 up 1.00000 1.00000

5 hdd 5.52620 osd.5 up 1.00000 1.00000

6 hdd 5.52620 osd.6 up 1.00000 1.00000

7 hdd 5.52620 osd.7 up 1.00000 1.00000

8 hdd 5.52620 osd.8 up 1.00000 1.00000

9 hdd 5.52620 osd.9 up 1.00000 1.00000

10 hdd 5.52620 osd.10 up 1.00000 1.00000

11 hdd 5.52620 osd.11 up 1.00000 1.00000

12 hdd 5.52620 osd.12 up 1.00000 1.00000

13 hdd 5.52620 osd.13 up 1.00000 1.00000

14 hdd 5.52620 osd.14 up 1.00000 1.00000

15 hdd 5.52620 osd.15 up 1.00000 1.00000

16 hdd 5.52620 osd.16 up 1.00000 1.00000

17 hdd 5.52620 osd.17 up 1.00000 1.00000

18 hdd 5.52620 osd.18 up 1.00000 1.00000

19 hdd 5.52620 osd.19 up 1.00000 1.00000

20 hdd 5.52620 osd.20 up 1.00000 1.00000

21 hdd 5.52620 osd.21 up 1.00000 1.00000

22 hdd 5.52620 osd.22 up 1.00000 1.00000

23 hdd 5.52620 osd.23 up 1.00000 1.00000

There are two servers and 48 OSDs in total. Removing osd8 means removing all 24 of the OSDs it hosts.
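The IDs to remove can also be pulled out of the tree output by a script rather than read off by eye. The following is a minimal sketch that assumes the plain-text column layout shown above; the function name `osds_under_host` is made up for this example.

```shell
# Hypothetical helper: print the IDs of all OSDs under one host bucket.
# Reads plain `ceph osd tree` output on stdin; assumes the column layout above.
osds_under_host() {
  awk -v host="$1" '
    # Bucket rows (root, host, ...) have a negative ID and no CLASS column.
    $1 ~ /^-[0-9]+$/ { in_host = ($3 == "host" && $4 == host); next }
    # OSD rows: ID CLASS WEIGHT NAME STATUS REWEIGHT PRI-AFF
    in_host && $4 ~ /^osd\.[0-9]+$/ { print $1 }
  '
}

# Usage (against a live cluster):
#   ceph osd tree | osds_under_host osd8
```

Because it only parses text, the helper can be tried on a saved copy of the tree output before it is pointed at a live cluster.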

2. Removing a single OSD

The steps below walk through the removal using osd.0 as the example.

2.1 Mark the OSD out

First, mark the OSD as out.

$ ceph osd out 0

marked out osd.0.

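At this stage Ceph automatically migrates the data held by the out OSD to the other healthy OSDs, so after running the command, use ceph -w to follow the migration. The migration is complete once the misplaced-object count has dropped to zero and no new messages appear.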

$ ceph -w

cluster:

id: 063ed8d6-fc89-4fcb-8811-ff23915983e7

health: HEALTH_ERR

12408/606262 objects misplaced (2.047%)

6 scrub errors

Reduced data availability: 2 pgs peering

Possible data damage: 5 pgs inconsistent

application not enabled on 7 pool(s)

services:

mon: 3 daemons, quorum dell1,dell2,dell3

mgr: dell1(active)

mds: cephfs-1/1/1 up {0=dell1=up:active}, 2 up:standby

osd: 48 osds: 48 up, 47 in; 44 remapped pgs

rgw: 3 daemons active

data:

pools: 22 pools, 1816 pgs

objects: 296k objects, 963 GB

usage: 5222 GB used, 254 TB / 259 TB avail

pgs: 0.220% pgs not active

12408/606262 objects misplaced (2.047%)

1763 active+clean

29 active+remapped+backfill_wait

14 active+remapped+backfilling

5 active+clean+inconsistent

3 peering

1 active+recovery_wait

1 activating+remapped

io:

client: 59450 kB/s rd, 4419 MB/s wr, 1095 op/s rd, 2848 op/s wr

recovery: 253 MB/s, 210 keys/s, 123 objects/s

2018-07-05 14:21:07.867104 mon.dell1 [WRN] Health check failed: Degraded data redundancy: 7/605732 objects degraded (0.001%), 1 pg degraded (PG_DEGRADED)

2018-07-05 14:21:12.252395 mon.dell1 [INF] Health check cleared: PG_DEGRADED (was: Degraded data redundancy: 7/605732 objects degraded (0.001%), 1 pg degraded)

2018-07-05 14:21:13.510741 mon.dell1 [WRN] Health check update: 12269/606262 objects misplaced (2.024%) (OBJECT_MISPLACED)

2018-07-05 14:21:13.510797 mon.dell1 [INF] Health check cleared: PG_AVAILABILITY (was: Reduced data availability: 2 pgs peering)

2018-07-05 14:21:19.488864 mon.dell1 [WRN] Health check update: 11553/606262 objects misplaced (1.906%) (OBJECT_MISPLACED)

2018-07-05 14:21:25.502619 mon.dell1 [WRN] Health check update: 10504/606262 objects misplaced (1.733%) (OBJECT_MISPLACED)

2018-07-05 14:21:31.745600 mon.dell1 [WRN] Health check update: 10091/606262 objects misplaced (1.664%) (OBJECT_MISPLACED)

2018-07-05 14:21:36.779666 mon.dell1 [WRN] Health check update: 9309/606262 objects misplaced (1.535%) (OBJECT_MISPLACED)

2018-07-05 14:21:41.779947 mon.dell1 [WRN] Health check update: 8580/606262 objects misplaced (1.415%) (OBJECT_MISPLACED)

2018-07-05 14:21:46.816584 mon.dell1 [WRN] Health check update: 8215/606262 objects misplaced (1.355%) (OBJECT_MISPLACED)

2018-07-05 14:21:51.817014 mon.dell1 [WRN] Health check update: 7331/606262 objects misplaced (1.209%) (OBJECT_MISPLACED)

2018-07-05 14:21:56.817406 mon.dell1 [WRN] Health check update: 6929/606262 objects misplaced (1.143%) (OBJECT_MISPLACED)

2018-07-05 14:22:01.817820 mon.dell1 [WRN] Health check update: 6426/606262 objects misplaced (1.060%) (OBJECT_MISPLACED)

2018-07-05 14:22:06.818188 mon.dell1 [WRN] Health check update: 5787/606262 objects misplaced (0.955%) (OBJECT_MISPLACED)

2018-07-05 14:22:11.818606 mon.dell1 [WRN] Health check update: 5429/606262 objects misplaced (0.895%) (OBJECT_MISPLACED)

2018-07-05 14:22:16.818981 mon.dell1 [WRN] Health check update: 5165/606262 objects misplaced (0.852%) (OBJECT_MISPLACED)

2018-07-05 14:22:20.303513 osd.35 [ERR] 13.2ad missing primary copy of 13:b56abc11:::d9593962-fa39-406f-bc35-7e4fcac1be9f.44307.2__shadow_121_1530008810116503747%2fplatform-cms.rar.2~vf7AEOlGNYM1ggI6IhV-iu22oDDXcvS.5_1:head, will try copies on 0

2018-07-05 14:22:21.819353 mon.dell1 [WRN] Health check update: 4866/606262 objects misplaced (0.803%) (OBJECT_MISPLACED)

2018-07-05 14:22:26.819657 mon.dell1 [WRN] Health check update: 4586/606262 objects misplaced (0.756%) (OBJECT_MISPLACED)

2018-07-05 14:22:31.819983 mon.dell1 [WRN] Health check update: 4323/606262 objects misplaced (0.713%) (OBJECT_MISPLACED)

2018-07-05 14:22:36.820335 mon.dell1 [WRN] Health check update: 4113/606262 objects misplaced (0.678%) (OBJECT_MISPLACED)

2018-07-05 14:22:41.820676 mon.dell1 [WRN] Health check update: 3949/606262 objects misplaced (0.651%) (OBJECT_MISPLACED)

2018-07-05 14:22:46.821040 mon.dell1 [WRN] Health check update: 3788/606262 objects misplaced (0.625%) (OBJECT_MISPLACED)

2018-07-05 14:22:51.821395 mon.dell1 [WRN] Health check update: 3665/606262 objects misplaced (0.605%) (OBJECT_MISPLACED)

2018-07-05 14:22:56.821692 mon.dell1 [WRN] Health check update: 3440/606262 objects misplaced (0.567%) (OBJECT_MISPLACED)

2018-07-05 14:23:01.821999 mon.dell1 [WRN] Health check update: 3170/606266 objects misplaced (0.523%) (OBJECT_MISPLACED)

2018-07-05 14:23:06.822355 mon.dell1 [WRN] Health check update: 2956/606266 objects misplaced (0.488%) (OBJECT_MISPLACED)

2018-07-05 14:23:11.822752 mon.dell1 [WRN] Health check update: 2747/606270 objects misplaced (0.453%) (OBJECT_MISPLACED)

2018-07-05 14:23:16.823168 mon.dell1 [WRN] Health check update: 2615/606270 objects misplaced (0.431%) (OBJECT_MISPLACED)

2018-07-05 14:23:21.823523 mon.dell1 [WRN] Health check update: 2512/606270 objects misplaced (0.414%) (OBJECT_MISPLACED)

2018-07-05 14:23:26.823878 mon.dell1 [WRN] Health check update: 2409/606270 objects misplaced (0.397%) (OBJECT_MISPLACED)

2018-07-05 14:23:31.824214 mon.dell1 [WRN] Health check update: 2299/606270 objects misplaced (0.379%) (OBJECT_MISPLACED)

2018-07-05 14:23:36.824596 mon.dell1 [WRN] Health check update: 2194/606270 objects misplaced (0.362%) (OBJECT_MISPLACED)

2018-07-05 14:23:41.825037 mon.dell1 [WRN] Health check update: 2101/606270 objects misplaced (0.347%) (OBJECT_MISPLACED)

2018-07-05 14:23:46.825390 mon.dell1 [WRN] Health check update: 1939/606270 objects misplaced (0.320%) (OBJECT_MISPLACED)

2018-07-05 14:23:51.825725 mon.dell1 [WRN] Health check update: 1777/606270 objects misplaced (0.293%) (OBJECT_MISPLACED)

2018-07-05 14:23:56.826087 mon.dell1 [WRN] Health check update: 1612/606270 objects misplaced (0.266%) (OBJECT_MISPLACED)

2018-07-05 14:24:01.826439 mon.dell1 [WRN] Health check update: 1444/606270 objects misplaced (0.238%) (OBJECT_MISPLACED)

2018-07-05 14:24:06.826755 mon.dell1 [WRN] Health check update: 1315/606270 objects misplaced (0.217%) (OBJECT_MISPLACED)

2018-07-05 14:24:11.828343 mon.dell1 [WRN] Health check update: 1264/606270 objects misplaced (0.208%) (OBJECT_MISPLACED)

2018-07-05 14:24:16.828638 mon.dell1 [WRN] Health check update: 1214/606270 objects misplaced (0.200%) (OBJECT_MISPLACED)

2018-07-05 14:24:21.886644 mon.dell1 [WRN] Health check update: 1161/606270 objects misplaced (0.191%) (OBJECT_MISPLACED)

2018-07-05 14:24:26.887027 mon.dell1 [WRN] Health check update: 1110/606270 objects misplaced (0.183%) (OBJECT_MISPLACED)

2018-07-05 14:24:32.287725 mon.dell1 [WRN] Health check update: 1069/606270 objects misplaced (0.176%) (OBJECT_MISPLACED)

2018-07-05 14:24:39.839578 mon.dell1 [WRN] Health check update: 960/606270 objects misplaced (0.158%) (OBJECT_MISPLACED)

2018-07-05 14:24:45.851276 mon.dell1 [WRN] Health check update: 905/606272 objects misplaced (0.149%) (OBJECT_MISPLACED)

2018-07-05 14:24:51.911053 mon.dell1 [WRN] Health check update: 849/606272 objects misplaced (0.140%) (OBJECT_MISPLACED)

2018-07-05 14:24:57.960803 mon.dell1 [WRN] Health check update: 784/606272 objects misplaced (0.129%) (OBJECT_MISPLACED)

2018-07-05 14:25:05.887641 mon.dell1 [WRN] Health check update: 688/606272 objects misplaced (0.113%) (OBJECT_MISPLACED)

2018-07-05 14:25:11.945922 mon.dell1 [WRN] Health check update: 631/606272 objects misplaced (0.104%) (OBJECT_MISPLACED)

2018-07-05 14:25:16.946267 mon.dell1 [WRN] Health check update: 570/606272 objects misplaced (0.094%) (OBJECT_MISPLACED)

2018-07-05 14:25:21.993994 mon.dell1 [WRN] Health check update: 528/606272 objects misplaced (0.087%) (OBJECT_MISPLACED)

2018-07-05 14:25:26.994417 mon.dell1 [WRN] Health check update: 468/606272 objects misplaced (0.077%) (OBJECT_MISPLACED)

2018-07-05 14:25:31.994789 mon.dell1 [WRN] Health check update: 411/606272 objects misplaced (0.068%) (OBJECT_MISPLACED)

2018-07-05 14:25:36.995192 mon.dell1 [WRN] Health check update: 353/606272 objects misplaced (0.058%) (OBJECT_MISPLACED)

2018-07-05 14:25:42.009567 mon.dell1 [WRN] Health check update: 293/606272 objects misplaced (0.048%) (OBJECT_MISPLACED)

2018-07-05 14:25:47.009879 mon.dell1 [WRN] Health check update: 241/606272 objects misplaced (0.040%) (OBJECT_MISPLACED)

2018-07-05 14:25:52.010822 mon.dell1 [WRN] Health check update: 187/606272 objects misplaced (0.031%) (OBJECT_MISPLACED)

2018-07-05 14:25:57.011182 mon.dell1 [WRN] Health check update: 133/606272 objects misplaced (0.022%) (OBJECT_MISPLACED)

2018-07-05 14:26:02.035637 mon.dell1 [WRN] Health check update: 78/606272 objects misplaced (0.013%) (OBJECT_MISPLACED)

2018-07-05 14:26:07.035965 mon.dell1 [WRN] Health check update: 22/606272 objects misplaced (0.004%) (OBJECT_MISPLACED)

2018-07-05 14:26:12.011546 mon.dell1 [INF] Health check cleared: OBJECT_MISPLACED (was: 22/606272 objects misplaced (0.004%))
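Watching `ceph -w` by hand works, but the same wait can be scripted. The sketch below polls `ceph health` until its summary no longer reports misplaced or degraded objects, matching the messages in the log above. The function name `wait_for_migration` and the 10-second default interval are illustrative choices, not part of the original procedure.

```shell
# Hypothetical helper: block until `ceph health` stops reporting
# misplaced or degraded objects. $1 is the poll interval in seconds.
wait_for_migration() {
  while ceph health | grep -Eq 'objects (misplaced|degraded)'; do
    sleep "${1:-10}"
  done
}

# Usage:
#   ceph osd out 0 && wait_for_migration
```

The loop only tracks data movement. Other health problems, such as scrub errors, can still be present when it returns. The health summary may also take a moment to show misplaced objects after the OSD is marked out, so a short pause before the first poll may be prudent.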

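2.2 Repair PGs

The end of the migration is not the end of the job, though. The command below shows that after the migration five PGs are in an inconsistent state and need to be repaired.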

$ ceph health detail

HEALTH_ERR 6 scrub errors; Possible data damage: 5 pgs inconsistent

OSD_SCRUB_ERRORS 6 scrub errors

PG_DAMAGED Possible data damage: 5 pgs inconsistent

pg 13.cd is active+clean+inconsistent, acting [20,35]

pg 13.244 is active+clean+inconsistent, acting [35,22]

pg 13.270 is active+clean+inconsistent, acting [35,14]

pg 13.308 is active+clean+inconsistent, acting [35,17]

pg 13.34f is active+clean+inconsistent, acting [11,35]

Run repair on each affected PG. If a PG is still inconsistent afterwards, a scrub can be run on it as well.

$ ceph pg repair 13.cd

$ ceph pg scrub 13.cd
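With several inconsistent PGs, typing each ID by hand gets tedious. As a rough sketch, the PG IDs can be extracted from the `ceph health detail` output shown above and fed to the repair command. The helper name `inconsistent_pgs` is hypothetical, and the parsing assumes the line format in that output.

```shell
# Hypothetical helper: print the IDs of PGs flagged inconsistent.
# Reads `ceph health detail` output on stdin.
inconsistent_pgs() {
  awk '$1 == "pg" && $3 == "is" && $4 ~ /inconsistent/ { print $2 }'
}

# Usage: issue a repair for every inconsistent PG.
#   ceph health detail | inconsistent_pgs | while read -r pg; do
#     ceph pg repair "$pg"
#   done
```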

2.3 Stop the OSD daemon

The data migration is now done, but the OSD daemon is still running:

0 hdd 5.52620 osd.0 up 0 1.00000

Next, log in to the OSD server and stop the daemon.

$ ssh osd8

$ sudo systemctl stop ceph-osd@0

The OSD now shows as down:

0 hdd 5.52620 osd.0 down 0 1.00000

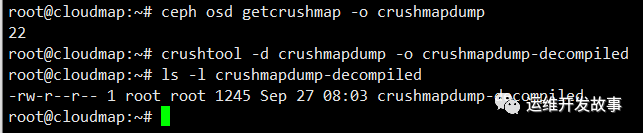
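The status check can be scripted too. This small sketch reads the STATUS column for one OSD from `ceph osd tree` output; it assumes rows formatted like the ones above, with a device class present, and the name `osd_status` is invented for illustration.

```shell
# Hypothetical helper: print the STATUS column (up/down) for one OSD.
# Reads plain `ceph osd tree` output on stdin; $1 is the numeric OSD ID.
osd_status() {
  awk -v name="osd.$1" '$4 == name { print $5 }'
}

# Usage:
#   ceph osd tree | osd_status 0
```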

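2.4 Remove the OSD

Finally, run purge. It removes the OSD from the CRUSH map for good, along with its authentication key and its entry in the OSD map. With that, the removal of a single OSD is finally complete.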

$ ceph osd purge 0 --yes-i-really-mean-it

purged osd.0
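Since the same steps have to be repeated for every OSD on the host, they can be wrapped in a function. This is only a sketch under several assumptions: the name `remove_osd` and the `POLL_SECONDS` variable are made up, passwordless ssh and sudo to the OSD host are assumed, and the PG repair from section 2.2 is left as a manual step.

```shell
# Hypothetical wrapper around the steps above. $1 = OSD ID, $2 = host.
# Assumes passwordless ssh/sudo on the host. PG repair (2.2) stays manual.
remove_osd() {
  ceph osd out "$1" || return 1
  # Wait until the health summary no longer reports data on the move.
  while ceph health | grep -Eq 'objects (misplaced|degraded)'; do
    sleep "${POLL_SECONDS:-10}"
  done
  ssh "$2" sudo systemctl stop "ceph-osd@$1" || return 1
  ceph osd purge "$1" --yes-i-really-mean-it
}

# Usage: drain and remove osd8's OSDs one at a time.
#   for id in $(seq 0 23); do remove_osd "$id" osd8 || break; done
```

Handling one OSD at a time keeps each migration small and lets the loop stop at the first failure, at the cost of a longer total run.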

One last thing: if /etc/ceph/ceph.conf contains an entry for this OSD, remember to remove it as well.
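A quick way to check for a leftover entry is to look for an `[osd.N]` section header in the config file. The helper below is a hypothetical sketch; it only detects a section header and does not edit the file.

```shell
# Hypothetical helper: succeed if the config file has an [osd.N] section.
# $1 = OSD ID, $2 = config path (defaults to /etc/ceph/ceph.conf).
has_osd_section() {
  grep -Eq "^[[:space:]]*\[osd\.$1]" "${2:-/etc/ceph/ceph.conf}"
}

# Usage:
#   has_osd_section 0 && echo "ceph.conf still has an [osd.0] section"
```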