By Nick Triller, Alibaba Cloud Tech Share Author

Offsite backups are an important security measure. They allow restoring data in case of hardware failure, accidental deletion, or any other catastrophic event. Automating backups improves the reliability of the backup process and ensures recent data gets backed up regularly.

We will use the Alibaba Cloud Object Storage Service (OSS) as an offsite backup storage solution. Data will be backed up by a simple bash script which gets executed regularly by a cronjob. Minio Client will be used to transfer the backups to OSS.

Alibaba Cloud OSS is a suitable backup storage solution. Rarely accessed objects such as backups can be stored reliably, cheaply and securely. The first 5 GB of storage can be used completely free of charge.

Prerequisites

Make sure you have an Alibaba Cloud account before you start. Sign up now to receive $300 in free credit. Create an ECS server or connect to an existing one with SSH to follow along. All you need is a Linux-based system.

Step 0 – Add a New User (Optional)

It is a good idea to execute the backup script with an account that has limited system access. This increases security: if the user that executes the backup script is compromised, an attacker gains only limited access to the system. Run the following command to add a new user named backup.

sudo adduser backup

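Limit the new user's system rights as much as possible without restricting its ability to upload backups to OSS. If you use this account, run the Minio Client configuration, the backup script setup and the crontab setup as backup (for example in a shell opened with sudo -iu backup), so that the credentials and the cronjob belong to this user.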

Step 1 – Create OSS Bucket

Next, we will create an OSS bucket for the backups. A bucket is a namespace for a collection of objects. Each object is uniquely identified by the bucket name and the object key.

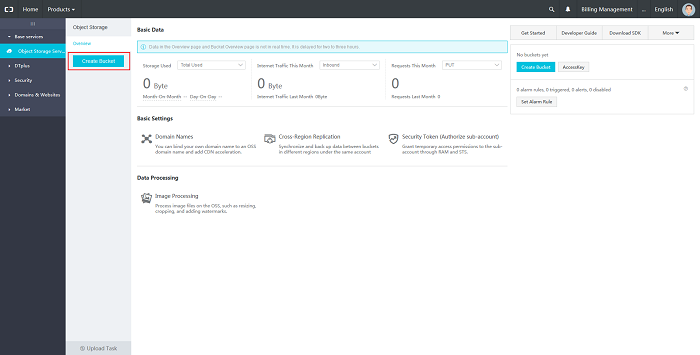

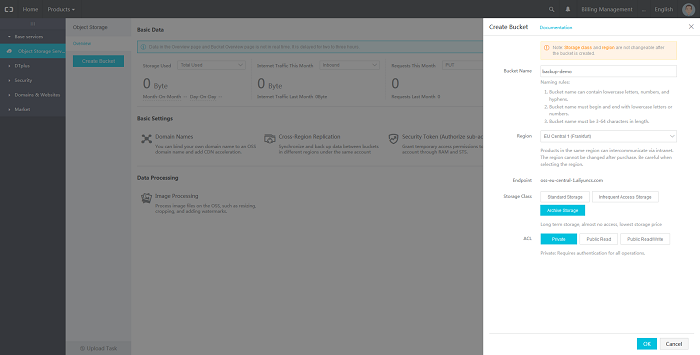

First, navigate to the Alibaba Cloud Console. Open the Products dropdown from the main navigation bar and click on the Object Storage Service link in the Storage & CDN section. You will be asked to accept the OSS Terms of Service if you have not used OSS before. Click on Create Bucket to create a bucket for the backup objects.

Enter the bucket's name, choose a region, pick a storage class and set the access control restrictions. We will use the bucket name backup-demo and the region EU Central 1.

OSS offers three storage classes: Standard, Infrequent Access and Archive. All three offer the same data reliability and service availability guarantees. They mainly differ in their data access features and pricing:

- Infrequent Access storage offers real-time access and lower storage fees than Standard storage. At least 30 days of storage are billed for any object, even if the object is deleted shortly after creation, and the minimum billable object size is 128 KB.

- Archive storage offers even lower storage fees, but data must be unfrozen before it can be read, which takes one minute. The minimum billable storage duration is 60 days and the minimum billable object size is 128 KB.
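To make the minimum billing rules concrete, here is a small bash sketch that computes the billable amount of a single object in KB-days (size multiplied by storage duration) after applying each class's minimum size and minimum duration. It only encodes the minimums stated above; treating Standard storage as having no minimums is an assumption of this sketch, and the result is not a price, because per-GB fees are not covered here. The function names are hypothetical.

```shell
#!/usr/bin/env bash

# Minimum billable storage duration in days, as stated above.
# Assumption: Standard storage has no minimum duration.
min_billable_days() {
  case "$1" in
    standard) echo 0 ;;
    ia) echo 30 ;;
    archive) echo 60 ;;
    *) echo "unknown storage class: $1" >&2; return 1 ;;
  esac
}

# Minimum billable object size in KB, as stated above.
# Assumption: Standard storage has no minimum object size.
min_billable_kb() {
  case "$1" in
    standard) echo 0 ;;
    ia|archive) echo 128 ;;
    *) echo "unknown storage class: $1" >&2; return 1 ;;
  esac
}

# billable_kb_days CLASS SIZE_KB DAYS_STORED
# Prints the billable amount in KB-days after applying both minimums.
billable_kb_days() {
  local class=$1 size_kb=$2 days=$3 min_kb min_days
  min_kb=$(min_billable_kb "$class") || return 1
  min_days=$(min_billable_days "$class") || return 1
  if (( size_kb < min_kb )); then size_kb=$min_kb; fi
  if (( days < min_days )); then days=$min_days; fi
  echo $(( size_kb * days ))
}
```

For example, billable_kb_days ia 10 5 prints 3840, because a 10 KB object deleted after 5 days is billed as 128 KB for 30 days. Many small, short-lived backup files can therefore cost more in Infrequent Access than their raw size suggests, which is another reason to bundle them into one archive.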

Considering these properties, Infrequent Access storage will be the best class for most backup use cases, because it combines real-time access with lower storage fees than Standard storage. More information about the storage classes can be found in the OSS documentation.

The ACL should be Private, because backups should only be written and read by authenticated systems. Please note that the selected storage class and region cannot be changed after the bucket is created. Click on OK to create the bucket.

Step 2 – Create Access Key

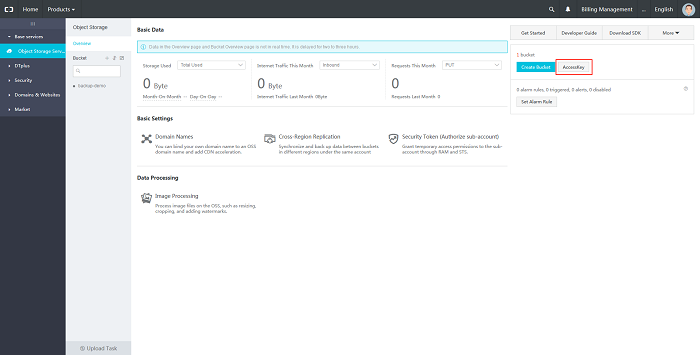

Next, we will create access keys which will be used by our backup script to authenticate with OSS. Click on the AccessKey button on the OSS dashboard page.

You will be asked if you want to use Sub User's AccessKey. Sub User's AccessKeys are more secure because their permissions can be locked down. Click on Get Started with Sub User's AccessKey to be redirected to the Resource Access Management (RAM) dashboard. You will be asked to accept the RAM Terms of Service if you have not used RAM before.

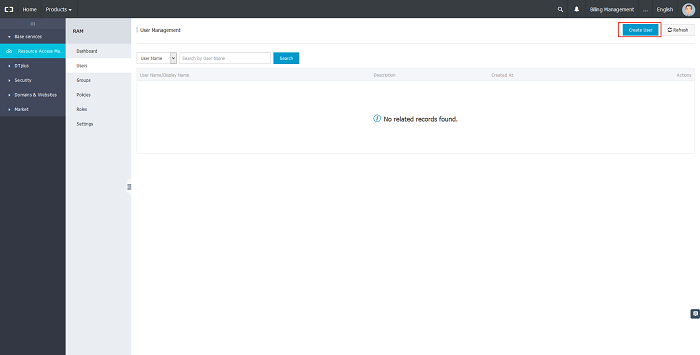

Next, click on Users in the sidebar. The following screen lists every sub user. Create a new sub user by clicking on the Create User button.

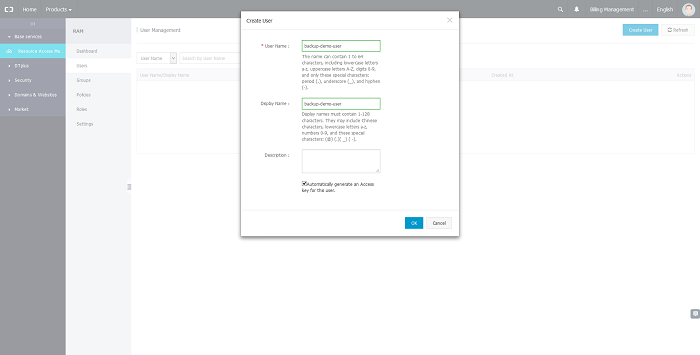

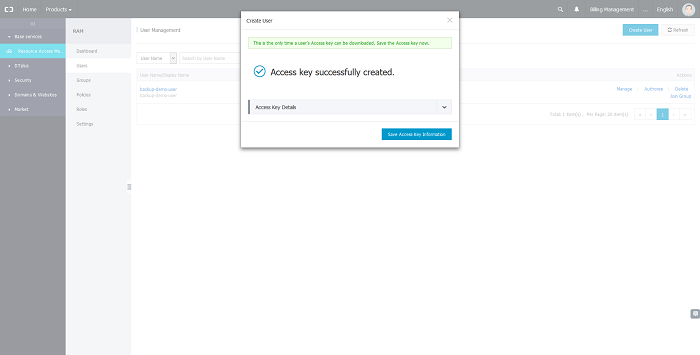

Next, enter the new user's data. We will choose the user name backup-demo-user. All other fields are optional. Mark the Automatically generate Access key for this user checkbox. Press OK to create the user.

You will be prompted to save the user's access key to your computer. The access key cannot be retrieved afterwards! Make sure to keep it secure.

Next, we will configure the permissions of the new sub user. These permissions apply to every request authenticated with its access key. We will attach the AliyunOSSFullAccess authorization policy, so anyone holding this access key will be able to manage all of your OSS resources. Keep in mind that custom policies can reduce access rights even further, for example to a single bucket or a group of buckets.
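As a sketch of such a restriction, the bash function below prints a custom RAM policy document that only allows listing, reading and writing objects in one bucket. The action names (oss:ListObjects, oss:GetObject, oss:PutObject) and the resource name format reflect our understanding of the RAM policy syntax for OSS and are assumptions: verify them against the RAM documentation before attaching the policy, and expect that Minio Client may need additional actions depending on the operations you run. The function name bucket_policy is hypothetical.

```shell
#!/usr/bin/env bash

# bucket_policy BUCKET (hypothetical helper)
# Prints a RAM policy document granting list, read and write access to the
# objects of a single OSS bucket. Action and resource names are assumptions
# to verify against the RAM documentation.
bucket_policy() {
  local bucket=$1
  cat <<EOF
{
  "Version": "1",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["oss:ListObjects", "oss:GetObject", "oss:PutObject"],
      "Resource": ["acs:oss:*:*:${bucket}", "acs:oss:*:*:${bucket}/*"]
    }
  ]
}
EOF
}
```

Running bucket_policy backup-demo > backup-demo-policy.json produces a document you could paste into the editor when creating a custom policy in the RAM console, and then attach to backup-demo-user instead of AliyunOSSFullAccess.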

Step 3 – Set Up Minio Client

Minio Client provides a modern alternative to UNIX commands such as ls, cat and cp. We will use it because it supports not only filesystems but also S3-compatible cloud storage such as Alibaba Cloud OSS.

You can use the Minio Client Docker image if you prefer. Refer to minio/mc on DockerHub for instructions.

Download the Minio Client executable with wget. Make sure to pick the correct download link from https://dl.minio.io/client/mc/release/ if you do not use linux-amd64.

cd ~

wget https://dl.minio.io/client/mc/release/linux-amd64/mc

Next, set the executable bit with the chmod command to allow executing the file.

chmod +x mc

Move the executable to a directory which is listed in the PATH environment variable. Doing so will enable running the executable from any directory without having to provide its full path. The /usr/bin directory can be used for this purpose on many common Linux distributions such as Ubuntu. The executable will be available to all users on the system.

sudo mv ./mc /usr/bin/mc

Run mc version to check if you can run Minio Client.
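If you install Minio Client on several machines, a small helper can pick the download link from uname output instead of hard-coding linux-amd64. This bash sketch assumes the release directories follow the same os-arch naming pattern as the linux-amd64 link above (for example linux-arm64); check the listing at https://dl.minio.io/client/mc/release/ before relying on it. The function name mc_download_url is hypothetical.

```shell
#!/usr/bin/env bash

# mc_download_url [OS] [MACHINE] (hypothetical helper)
# Maps `uname -s` / `uname -m` style values to a Minio Client download URL.
# Both arguments default to the values of the current machine.
# Assumption: release directories are named <os>-<arch>, like linux-amd64.
mc_download_url() {
  local os machine arch
  os=$(printf '%s' "${1:-$(uname -s)}" | tr '[:upper:]' '[:lower:]')
  machine=${2:-$(uname -m)}
  case "$machine" in
    x86_64|amd64) arch=amd64 ;;
    aarch64|arm64) arch=arm64 ;;
    *) echo "unsupported machine type: $machine" >&2; return 1 ;;
  esac
  echo "https://dl.minio.io/client/mc/release/${os}-${arch}/mc"
}

# Example: wget "$(mc_download_url)"
```

Failing loudly on unknown machine types is a deliberate choice: downloading a binary for the wrong architecture would only surface later as an "exec format error".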

Step 4 – Configure Minio Client for Usage with Alibaba Cloud OSS

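Next, we will configure Minio Client for usage with Alibaba Cloud OSS. Run the following command after replacing the placeholders OSS_Endpoint, OSS_ACCESS_KEY and OSS_SECRET_KEY (including the angle brackets) with the corresponding values from steps 1 and 2. The endpoint can be found on the OSS bucket dashboard. Prefix it with https://, because Minio Client expects a full URL. Use the Internet Access endpoint if you are not sure which endpoint to choose. All necessary Minio Client configuration files will be automatically created if they are missing. Newer Minio Client releases replace mc config host add with mc alias set, which takes the same arguments.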

mc config host add oss <OSS_Endpoint> <OSS_ACCESS_KEY> <OSS_SECRET_KEY>

List all objects in the bucket created in step 1 to check if the configuration works.

mc ls oss/backup-demo

You should not see any output as the bucket does not contain any objects. Check the configuration file at ~/.mc/config.json if an error message is displayed.
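The endpoint shown on the bucket dashboard usually follows the pattern oss-REGION.aliyuncs.com, with an -internal suffix after the region for access from ECS instances in the same region. The bash sketch below builds the URL that Minio Client expects from a region ID. The naming pattern is an assumption, so compare the result with the dashboard; the function name oss_endpoint is hypothetical.

```shell
#!/usr/bin/env bash

# oss_endpoint REGION_ID [internal] (hypothetical helper)
# Builds an OSS endpoint URL, assuming the pattern oss-REGION.aliyuncs.com
# for Internet access and oss-REGION-internal.aliyuncs.com for access from
# ECS instances in the same region.
oss_endpoint() {
  local region=${1:-} suffix=""
  if [ -z "$region" ]; then
    echo "usage: oss_endpoint REGION_ID [internal]" >&2
    return 1
  fi
  if [ "${2:-}" = "internal" ]; then
    suffix="-internal"
  fi
  echo "https://oss-${region}${suffix}.aliyuncs.com"
}
```

For the bucket in this tutorial, oss_endpoint eu-central-1 prints https://oss-eu-central-1.aliyuncs.com, which can be passed as the endpoint argument of mc config host add.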

Step 5 – Create a Backup Script

Next, we will create a script that backs up all files in a directory to OSS. The backup data could be a database dump or any other data. In practice, the backup data would be generated regularly by another script; for this demonstration, we will manually place a few text files. If you want to back up a directory with multiple files as one object, consider using tar to package the directory as a single file.

Use a text editor of your choice to create a file named backup.sh in your home directory. Copy the following script into the file.

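#!/usr/bin/env bash

DESTINATION="${!#}"  # the last argument

SOURCES=("${@:1:$#-1}")  # all arguments except the last

mc cp --json --recursive "${SOURCES[@]}" "$DESTINATION"

The first line is called a shebang and makes sure the script will be executed with bash. Afterwards, we assign the last argument to a variable named DESTINATION and all preceding arguments to an array named SOURCES. Treating every argument except the last as a source matters because a shell glob such as ~/backup-demo/* expands to one argument per file; if the script only read its first two arguments, a second backup file would be mistaken for the destination. The last line uses Minio Client to recursively copy all files and directories in SOURCES into DESTINATION. Sources and destination can each refer to a filesystem or to cloud storage. The file names will be used as object keys. The --json option enables structured output as JSON.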

Any files which are already present in the OSS bucket will be skipped. Therefore, you should make sure not to reuse filenames. The easiest method to make sure all file names are unique is to include a timestamp in the file names.
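Combining the tar suggestion above with timestamped file names, the bash sketch below packs a directory into a single compressed archive whose name includes the current UTC time, such as data-YYYYMMDDTHHMMSSZ.tar.gz. The function names and the naming scheme are hypothetical choices for illustration.

```shell
#!/usr/bin/env bash

# timestamped_name PREFIX EXTENSION (hypothetical helper)
# Prints PREFIX-YYYYMMDDTHHMMSSZ.EXTENSION using the current UTC time.
timestamped_name() {
  printf '%s-%s.%s\n' "$1" "$(date -u +%Y%m%dT%H%M%SZ)" "$2"
}

# archive_dir SOURCE_DIR OUTPUT_DIR (hypothetical helper)
# Packs SOURCE_DIR into one gzip-compressed tar file inside OUTPUT_DIR
# and prints the path of the new archive.
archive_dir() {
  local src=${1%/} out_dir=$2 base name
  base=$(basename "$src")
  name=$(timestamped_name "$base" tar.gz)
  mkdir -p "$out_dir"
  # -C switches to the parent directory so the archive stores relative paths.
  tar -czf "$out_dir/$name" -C "$(dirname "$src")" "$base"
  printf '%s\n' "$out_dir/$name"
}
```

A separate job could run archive_dir ~/important-data ~/backup-demo (the ~/important-data directory is hypothetical) to place a new, uniquely named archive where the backup script picks it up. The timestamp has one-second resolution, so this assumes at most one archive per directory per second; using UTC avoids ambiguous names around daylight saving time changes.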

Step 6 – Schedule Regular Script Execution with Crontab

Next, we will use a cronjob to execute the backup script regularly. First, use chmod to set the executable bit on the backup.sh script. This will allow file execution.

chmod +x ~/backup.sh

Next, run crontab -e to configure a cronjob. If this is the first time you use crontab, you will be asked to choose an editor:

no crontab for nick - using an empty one

Select an editor. To change later, run 'select-editor'.

1. /bin/ed

2. /bin/nano <---- easiest

3. /usr/bin/vim.basic

4. /usr/bin/vim.tiny

Choose 1-4 [2]:

Press Enter to choose nano (the default). Add the following line to the end of the file.

* * * * * ~/backup.sh ~/backup-demo/* oss/backup-demo

In this line, ~/backup-demo/* refers to the files in the ~/backup-demo directory, and oss/backup-demo points to the OSS bucket we created earlier. The five asterisks mean the backup script will be executed once per minute. Save the file.
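If you prefer not to edit the crontab interactively, for example when provisioning servers with a script, the entry can also be added from the command line. The bash sketch below copies an existing crontab from standard input to standard output and appends the backup line only if an identical line is not already present, so running it twice does not create a duplicate job. The function name ensure_cron_line is hypothetical.

```shell
#!/usr/bin/env bash

# ensure_cron_line LINE (hypothetical helper)
# Copies a crontab from stdin to stdout and appends LINE only if an
# identical line is not already present.
ensure_cron_line() {
  local line=$1 found=0 existing
  # The `|| [ -n ... ]` part also handles a last line without a newline.
  while IFS= read -r existing || [ -n "$existing" ]; do
    printf '%s\n' "$existing"
    [ "$existing" = "$line" ] && found=1
  done
  [ "$found" -eq 1 ] || printf '%s\n' "$line"
}

# Example (not run here). crontab -l prints nothing to stdout when no
# crontab exists yet, which is treated as an empty crontab:
#   crontab -l 2>/dev/null \
#     | ensure_cron_line '* * * * * ~/backup.sh ~/backup-demo/* oss/backup-demo' \
#     | crontab -
# For real backups, a schedule such as '0 3 * * *' (daily at 03:00) is
# often frequent enough.
```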

Let's check if everything works as expected. Create the backup directory and a file in the backup directory:

mkdir ~/backup-demo

echo test1 > ~/backup-demo/test1.txt

The file should appear in your OSS bucket within a minute. Check the bucket with mc ls oss/backup-demo or open the bucket dashboard in the Alibaba Cloud console.
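Listing the bucket shows that an object exists, but not that its content is intact. The bash sketch below streams an object with mc cat and compares it byte by byte with the local file using cmp. The function name verify_object is hypothetical, as is the MC variable, which lets you substitute another command for mc (for example in tests).

```shell
#!/usr/bin/env bash

# verify_object LOCAL_FILE REMOTE_OBJECT (hypothetical helper)
# Streams REMOTE_OBJECT with `mc cat` and compares it with LOCAL_FILE.
# Returns 0 if both are identical and non-zero otherwise.
# MC (hypothetical) names the command to run instead of mc.
verify_object() {
  local local_file=$1 remote_object=$2
  "${MC:-mc}" cat "$remote_object" | cmp -s - "$local_file"
}

# Example:
#   verify_object ~/backup-demo/test1.txt oss/backup-demo/test1.txt && echo "backup OK"
```

If the object is missing, mc cat produces no output, so cmp reports a difference for any non-empty local file and the check fails as well.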

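If the files do not appear in the OSS bucket, check the cron logs. On Ubuntu and Debian, cron logs every job execution to /var/log/syslog by default. The output of the job itself is not written there; cron mails it to the local user if a mail transfer agent is installed.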

Further Considerations

- It makes sense to compress your backups if you need to back up a large amount of data.

- You should make sure to monitor any automated backups. This will enable you to fix any problems as they come up and make sure your data is backed up regularly.

- A backup that cannot be restored is useless. You should make sure your backups and backup procedures work as expected by testing them regularly.
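For the monitoring point, one lightweight approach is to append the backup script's JSON output to a log file and scan that log for failed transfers. The bash sketch below counts records whose status field is error. It assumes that Minio Client's --json output marks failures with a "status" field set to "error", which is worth confirming against the output of your mc version; the function name backup_log_errors and the ~/backup.log path are hypothetical.

```shell
#!/usr/bin/env bash

# backup_log_errors LOGFILE (hypothetical helper)
# Prints the number of records in LOGFILE whose "status" field is "error"
# and returns non-zero if there is at least one (or if LOGFILE is unreadable).
# Assumption: LOGFILE holds `mc cp --json` output, one JSON record per line,
# for example collected with this crontab entry:
#   * * * * * ~/backup.sh ~/backup-demo/* oss/backup-demo >> ~/backup.log 2>&1
backup_log_errors() {
  local log=${1:-} count
  if [ ! -r "$log" ]; then
    echo "cannot read log file: $log" >&2
    return 2
  fi
  # grep -c prints 0 (and exits 1) when nothing matches, so ignore its status.
  count=$(grep -cE '"status" *: *"error"' "$log" || true)
  echo "$count"
  [ "$count" -eq 0 ]
}
```

A monitoring job could call backup_log_errors ~/backup.log and alert you whenever it fails. Note that an empty or stale log also passes this check, so pairing it with a restore test catches problems that a clean log would hide.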

Conclusion

In this Tech Share article, we explored how backups can be automatically uploaded to OSS. We set up a new OSS bucket, created access keys, set up and configured Minio Client for the file transfer to OSS, created a backup script and scheduled the backup script execution with a cronjob. Leave a comment if you have any questions!