While building a cube, the job failed at step 10. The cause: the resources configured for the MR jobs that Kylin runs were insufficient.

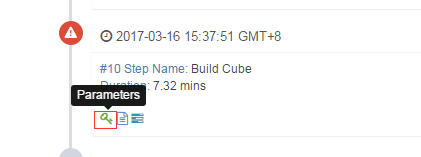

The job ran up to step 10 and then failed. Tracking down the specific cause:

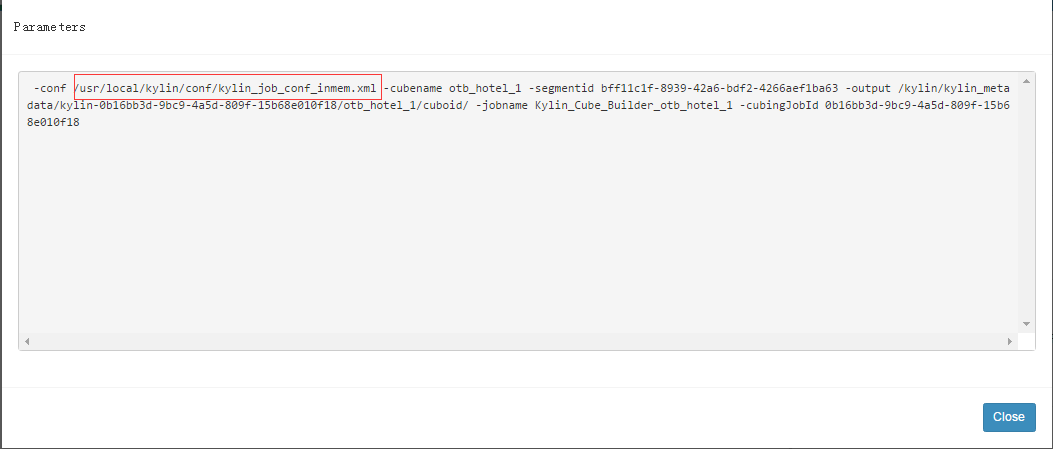

1. Check the Parameters

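2. Check the MR job log, which reveals the problem: the reduce container was killed for exceeding its physical memory limit (1.1 GB used of 1 GB)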

    2017-03-16 15:42:43,241 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1484048632162_72188_r_000001_2: Container [pid=11851,containerID=container_1484048632162_72188_01_000170] is running beyond physical memory limits. Current usage: 1.1 GB of 1 GB physical memory used; 2.8 GB of 2.1 GB virtual memory used. Killing container.
    Dump of the process-tree for container_1484048632162_72188_01_000170 :
    |- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
    |- 11859 11851 11851 11851 (java) 20435 2125 2883371008 280614 /usr/local/jdk/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx768m -Djava.io.tmpdir=/hadoop/2/yarn/local/usercache/kylin/appcache/application_1484048632162_72188/container_1484048632162_72188_01_000170/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/hadoop/6/yarn/logs/application_1484048632162_72188/container_1484048632162_72188_01_000170 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 10.10.16.21 41607 attempt_1484048632162_72188_r_000001_2 170
    |- 11851 11849 11851 11851 (bash) 0 0 108625920 341 /bin/bash -c /usr/local/jdk/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx768m -Djava.io.tmpdir=/hadoop/2/yarn/local/usercache/kylin/appcache/application_1484048632162_72188/container_1484048632162_72188_01_000170/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/hadoop/6/yarn/logs/application_1484048632162_72188/container_1484048632162_72188_01_000170 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 10.10.16.21 41607 attempt_1484048632162_72188_r_000001_2 170 1>/hadoop/6/yarn/logs/application_1484048632162_72188/container_1484048632162_72188_01_000170/stdout 2>/hadoop/6/yarn/logs/application_1484048632162_72188/container_1484048632162_72188_01_000170/stderr
    Container killed on request. Exit code is 143
    Container exited with a non-zero exit code 143
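The key figures in this log entry are easy to miss in the long command lines. As a rough sketch (not part of the original troubleshooting), the Python below pulls the used and limit values out of the "Current usage" sentence and converts the `RSSMEM_USAGE(PAGES)` value of the java process into bytes. The helper names `parse_usage` and `rss_bytes` are hypothetical, and the 4096-byte page size is an assumption about the node, not something the log states.

```python
import re

# Excerpt of the diagnostics message from the MR job log above.
DIAGNOSTICS = (
    "Container [pid=11851,containerID=container_1484048632162_72188_01_000170] "
    "is running beyond physical memory limits. Current usage: 1.1 GB of 1 GB "
    "physical memory used; 2.8 GB of 2.1 GB virtual memory used. Killing container."
)

# Matches the "Current usage" sentence and captures the four GB figures.
USAGE_RE = re.compile(
    r"Current usage: (?P<phys_used>[\d.]+) GB of (?P<phys_limit>[\d.]+) GB "
    r"physical memory used; (?P<virt_used>[\d.]+) GB of (?P<virt_limit>[\d.]+) GB "
    r"virtual memory used"
)


def parse_usage(message):
    """Return the four GB figures as floats, or None if the sentence is absent."""
    match = USAGE_RE.search(message)
    if match is None:
        return None
    return {name: float(value) for name, value in match.groupdict().items()}


def rss_bytes(pages, page_size=4096):
    """Convert an RSS page count to bytes; the 4096-byte page size is assumed."""
    return pages * page_size


usage = parse_usage(DIAGNOSTICS)
print(usage)
# 280614 is the RSSMEM_USAGE(PAGES) value of the java process in the dump.
print(rss_bytes(280614) / 1024 ** 3)
```

Under the assumed page size, the java process holds a little over 1 GiB of resident memory, which agrees with the reported 1.1 GB against a 1 GB limit. Note that the JVM was started with `-Xmx768m`, so the heap cap alone did not keep the whole process under the container limit.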

3. Edit the configuration file kylin_job_conf_inmem.xml and add:

    <!-- reduce -->
    <property>
        <name>mapreduce.reduce.memory.mb</name>
        <value>3096</value>
        <description>Amount of memory required by each reduce task</description>
    </property>
    <property>
        <name>mapreduce.reduce.java.opts</name>
        <value>-Xmx3096m</value>
        <description>JVM memory (heap) for reduce tasks</description>
    </property>
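The two properties above are related: `mapreduce.reduce.memory.mb` is the container size that YARN enforces, while `-Xmx` in `mapreduce.reduce.java.opts` only caps the JVM heap, and the process also uses non-heap memory. The failed container in the log ran with `-Xmx768m` inside a 1 GB limit. A common rule of thumb, which is an assumption here and not something the original configuration follows, is to keep the heap at roughly 80% of the container size. The sketch below derives both values from a single container size; `reduce_memory_settings`, `to_property_xml` and the `heap_ratio` default are hypothetical names chosen for illustration.

```python
def reduce_memory_settings(container_mb, heap_ratio=0.8):
    """Derive reduce-side settings from one container size.

    heap_ratio is an assumed rule of thumb (heap ~80% of the container),
    leaving headroom for non-heap JVM memory.
    """
    heap_mb = int(container_mb * heap_ratio)
    return {
        "mapreduce.reduce.memory.mb": str(container_mb),
        "mapreduce.reduce.java.opts": f"-Xmx{heap_mb}m",
    }


def to_property_xml(settings):
    """Render the settings as Hadoop-style <property> elements."""
    blocks = []
    for name, value in settings.items():
        blocks.append(
            "<property>\n"
            f"    <name>{name}</name>\n"
            f"    <value>{value}</value>\n"
            "</property>"
        )
    return "\n".join(blocks)


# 3096 is the container size used in step 3.
settings = reduce_memory_settings(3096)
print(to_property_xml(settings))
```

With a 3096 MB container this yields a heap of `-Xmx2476m` rather than `-Xmx3096m`. Whether that headroom is needed depends on the job; if the reduce tasks are killed again with the same "running beyond physical memory limits" message, lowering the heap relative to the container is one thing to try.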