How Many Non-Persistent Connections Can Nginx/Tengine Support Concurrently

Source: http://jaseywang.me/2015/06/04/how-many-non-persistent-connections-can-nginxtengine-support-concurrently/

I happened to come across this article: https://blog.cloudflare.com/how-to-receive-a-million-packets/

The source post above was published even earlier than that one, which is impressive.

Here is my summary of the points involved:

- Fortunately, these Nginx servers all ship with 10G network cards in mode 4 (802.3ad) bonding, and the link layer and lower levels are already pre-optimized (TSO/GSO/GRO/LRO, ring buffer size).
- TCP accept queue
- CPU and memory
- The networking goes wild because of the packet rate (packets per second)
- Interrupts
- Drops/overruns
- In tcpdump, the incoming packets are smaller than 75 bytes
- Each connection does a 3-way handshake, sends one TCP segment carrying an HTTP POST request, and exits with a 4-way handshake
- The same requests are resent every 10 minutes or longer
- More than 80% of the traffic is purely small TCP packets, which has a significant impact on the network card and CPU
- It is quite expensive to set up a TCP connection
- The number of new requests connected per second is quite small compared to normal

That makes 12 points to know.
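To see why the incoming packets stay under 75 bytes, here is a minimal Python sketch that adds up the header sizes of TCP segments carrying no payload. It assumes Ethernet II without a VLAN tag (tcpdump does not show the FCS), IPv4 without options, and the TCP option sets a Linux host typically sends (SYN/SYN-ACK: MSS, SACK-permitted, timestamps, NOP, window scale; later segments: two NOPs plus timestamps). These layouts are assumptions, not read from the original capture.

```python
ETH_HDR = 14   # Ethernet II header, no VLAN tag; tcpdump does not show the 4-byte FCS
IPV4_HDR = 20  # IPv4 header without options
TCP_HDR = 20   # fixed part of the TCP header


def frame_size(tcp_options: int = 0, payload: int = 0) -> int:
    """Bytes per frame as tcpdump reports them for one TCP/IPv4 segment."""
    padded = (tcp_options + 3) // 4 * 4  # TCP options are padded to a 4-byte boundary
    return ETH_HDR + IPV4_HDR + TCP_HDR + padded + payload


# Assumed Linux option layouts (not from the original capture):
SYN_OPTIONS = 4 + 2 + 10 + 1 + 3  # MSS + SACK_PERM + timestamps + NOP + window scale = 20
ACK_OPTIONS = 1 + 1 + 10          # NOP + NOP + timestamps = 12

if __name__ == "__main__":
    print("SYN / SYN-ACK:", frame_size(SYN_OPTIONS))             # 74
    print("ACK / FIN with timestamps:", frame_size(ACK_OPTIONS))  # 66
    # Senders pad frames to the 60-byte Ethernet minimum, so a received copy may show 60.
    print("ACK without options:", frame_size())                   # 54
```

Under these assumptions every handshake and teardown segment is at most 74 bytes, so a non-persistent connection that carries a single small POST produces mostly sub-75-byte frames.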

Packet forwarding rate (pps)

Packet size buckets for interface bond0
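A packet size histogram like the one for bond0 can be built from a list of captured frame sizes. This Python sketch uses hypothetical bucket boundaries (the original buckets are not preserved) and also reports the share of frames below a threshold, such as the 75 bytes seen in tcpdump.

```python
from collections import Counter
from typing import Iterable

# Hypothetical inclusive bucket upper bounds in bytes; adjust to your own tooling.
BUCKETS = (64, 128, 256, 512, 1024, 1518)


def bucket_of(size: int) -> str:
    """Label of the bucket a frame of `size` bytes falls into."""
    low = 0
    for high in BUCKETS:
        if size <= high:
            return f"{low + 1}-{high}"
        low = high
    return f">{BUCKETS[-1]}"


def histogram(sizes: Iterable[int]) -> Counter:
    """Count frames per size bucket."""
    return Counter(bucket_of(s) for s in sizes)


def small_share(sizes: Iterable[int], limit: int = 75) -> float:
    """Fraction of frames strictly smaller than `limit` bytes."""
    sizes = list(sizes)
    return sum(s < limit for s in sizes) / len(sizes) if sizes else 0.0
```

For example, `histogram([54, 66, 74, 600, 1514])` puts one frame in `1-64`, two in `65-128`, and one each in `513-1024` and `1025-1518`.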

Reference: http://www.opscoder.info/receive_packets.html

Conclusion

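In short, if you want perfect performance, you need to do the following:

- Make sure traffic is evenly distributed across many RX queues and SO_REUSEPORT processes. In practice, the load is usually well distributed as long as there are many connections (or flows).
- Have enough spare CPU capacity to actually pick up the packets from the kernel.
- To keep things more stable, both the RX queues and the receiving processes should be on a single NUMA node.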
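The SO_REUSEPORT point can be illustrated with a minimal Python sketch: several listening sockets bound to the same port, which on Linux (3.9 and later) lets the kernel spread incoming connections across them. In a real server each socket would live in its own worker process; here they share one process only to keep the example short, and the host and port values are arbitrary assumptions.

```python
import socket
from typing import List


def reuseport_listeners(count: int, host: str = "127.0.0.1", port: int = 0) -> List[socket.socket]:
    """Open `count` TCP listeners that share one port through SO_REUSEPORT."""
    listeners = []
    for _ in range(count):
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        # Every socket must set SO_REUSEPORT before bind() for the port to be shared.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEPORT, 1)
        sock.bind((host, port))
        sock.listen(128)
        # If port was 0, reuse whatever ephemeral port the first bind picked.
        port = sock.getsockname()[1]
        listeners.append(sock)
    return listeners


if __name__ == "__main__":
    socks = reuseport_listeners(4)
    print({s.getsockname() for s in socks})  # one (host, port) pair shared by all
    for s in socks:
        s.close()
```

Nginx exposes the same mechanism through the `reuseport` parameter of the `listen` directive (available since 1.9.1), giving each worker its own listening socket.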

RX queues and receiving processes

(`ethtool -S eth2 | grep rx`, `watch 'netstat -s --udp'`)
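Watching these counters by eye is tedious, so here is a minimal Python sketch that parses the text output of `ethtool -S` and turns two snapshots into per-second rates. Counter names differ between NIC drivers, so filtering on the substring "rx" is an assumption.

```python
from typing import Dict


def parse_ethtool_stats(text: str, needle: str = "rx") -> Dict[str, int]:
    """Parse `ethtool -S <iface>` output, keeping counters whose name contains `needle`."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        name, value = name.strip(), value.strip()
        # Skip the "NIC statistics:" header and any non-numeric lines.
        if sep and needle in name and value.isdigit():
            stats[name] = int(value)
    return stats


def per_second(before: Dict[str, int], after: Dict[str, int], seconds: float) -> Dict[str, float]:
    """Rate of change for every counter present in both snapshots."""
    return {k: (after[k] - before[k]) / seconds for k in after if k in before}
```

On a live host the text could come from `subprocess.run(["ethtool", "-S", "eth2"], capture_output=True, text=True).stdout`, taken twice a few seconds apart; a growing drop or overrun rate points at the NIC or ring buffer rather than Nginx.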

[Table: packet size (bytes) vs. count]

Working backwards

What causes the TCP accept queue to fill up? A limit on Nginx's processing capacity.
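One way to confirm that the accept queue overflows is to watch the `ListenOverflows` and `ListenDrops` counters that Linux exposes in /proc/net/netstat (the same data `netstat -s` summarizes; `ss -lnt` shows the current queue length as Recv-Q for listening sockets). Below is a minimal Python parser for that file's paired header/value lines; it is a sketch, not a monitoring tool.

```python
from typing import Dict


def parse_proc_netstat(text: str) -> Dict[str, Dict[str, int]]:
    """Parse /proc/net/netstat style text: a line of names, then a line of values."""
    lines = [line for line in text.splitlines() if line.strip()]
    result: Dict[str, Dict[str, int]] = {}
    for header, values in zip(lines[0::2], lines[1::2]):
        prefix, _, names = header.partition(":")
        _, _, numbers = values.partition(":")
        result[prefix] = dict(zip(names.split(), (int(n) for n in numbers.split())))
    return result


def listen_overflows(path: str = "/proc/net/netstat") -> Dict[str, int]:
    """Return the accept-queue related counters from the TcpExt section."""
    with open(path) as f:
        tcp_ext = parse_proc_netstat(f.read()).get("TcpExt", {})
    return {k: tcp_ext.get(k, 0) for k in ("ListenOverflows", "ListenDrops")}
```

If these counters keep climbing while CPU is not saturated, the backlog (`somaxconn` and the `listen` backlog) is a likely suspect; if CPU is saturated, Nginx itself is the limit.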

The situation

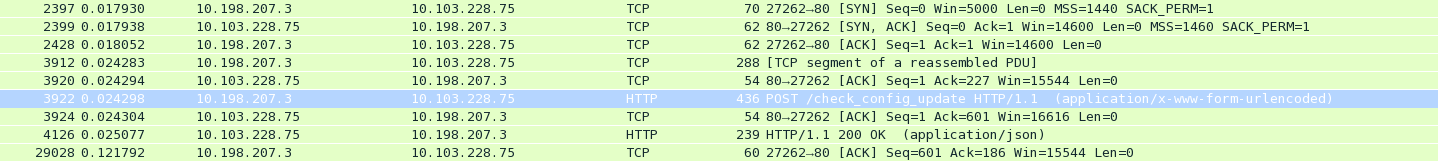

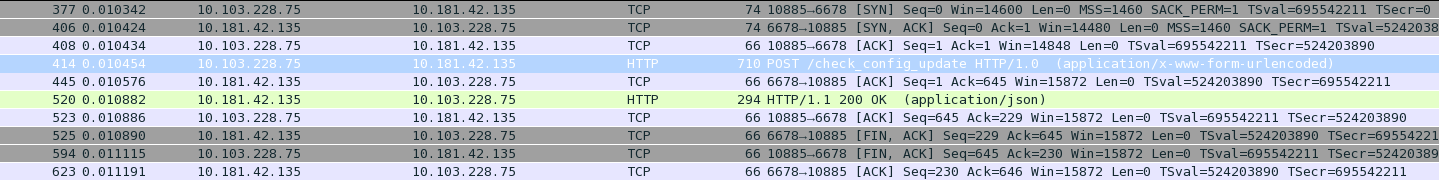

A client packet's lifetime

The first image is the packet capture between the IPVS load balancer and Nginx; the second shows the communication between Nginx and the upstream server.

Finding: each connection does a 3-way handshake, sends one TCP segment carrying an HTTP POST request, and exits with a 4-way handshake.

The final solution

Nginx will become the bottleneck one day, so I need to run some real-traffic benchmarks to get an accurate result.

Recovering the product

After scaling out to 9 servers:

Each Nginx gets about 10K qps, with 300ms response time, zero TCP queue, and 10% CPU util.
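These per-server numbers can be related with Little's law (an addition here, not part of the original post): the average number of requests in flight equals the arrival rate times the average time each request spends in the system. Assuming 300ms is the average response time, one Nginx server at 10K qps holds:

$$
L = \lambda W = 10\,000~\text{req/s} \times 0.3~\text{s} = 3\,000~\text{requests in flight}
$$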

Benchmark

The benchmark process is not complex: remove one Nginx server (real server) from IPVS at a time and monitor the metrics, such as qps, response time, TCP queue, and CPU/memory/networking/disk utilization. When qps or a similar metric stops going up and begins to turn down, that is usually the maximum load the server can support.
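The stopping rule above (qps stops rising and starts to turn down) can be written as a small Python helper. It is a sketch: the 2% tolerance and the sample readings are hypothetical, and in practice you would feed it qps readings taken after each server is removed from IPVS.

```python
from typing import Optional, Sequence


def saturation_point(qps: Sequence[float], tolerance: float = 0.02) -> Optional[int]:
    """Index of the last sample before qps stops growing by more than `tolerance`.

    Returns None if qps is still climbing at the end of the series.
    """
    for i in range(len(qps) - 1):
        if qps[i + 1] <= qps[i] * (1 + tolerance):
            return i
    return None


if __name__ == "__main__":
    # Illustrative readings only, one per step of removing a real server from IPVS.
    readings = [8_000, 12_000, 16_000, 19_000, 21_000, 20_500]
    idx = saturation_point(readings)
    print(idx, readings[idx])  # 4 21000
```

The tolerance keeps noise from ending the run too early; a flat or falling step is treated as saturation, after which it is time to put the removed servers back.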

1. Before kicking off, make sure some key parameters and directives are set correctly, including Nginx worker_processes/worker_connections, CPU affinity to distribute interrupts, and kernel parameters (tcp_max_syn_backlog/file-max/netdev_max_backlog/somaxconn, etc.).

2. Keep an eye on rmem_max/wmem_max; during my benchmark, I noticed quite different results with different values.

3. Turning off the access log to cut down on IO, and turning off timestamps in the TCP stack, may achieve better performance; I haven't tested this.

4. In this case, you can decrease the TCP keepalive parameters as a workaround.
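Before each run it helps to record the kernel parameters named in items 1 to 4 so results can be compared across runs. This minimal Python sketch reads them from /proc/sys on Linux; the exact list of sysctl names is an assumption about which knobs the checklist means, and a missing key is reported as None rather than guessed.

```python
from pathlib import Path
from typing import Dict, Iterable, Optional

# Assumed sysctl names for the parameters mentioned in the checklist (Linux).
PARAMS = (
    "net.ipv4.tcp_max_syn_backlog",
    "fs.file-max",
    "net.core.netdev_max_backlog",
    "net.core.somaxconn",
    "net.core.rmem_max",
    "net.core.wmem_max",
    "net.ipv4.tcp_timestamps",
    "net.ipv4.tcp_keepalive_time",
    "net.ipv4.tcp_keepalive_intvl",
    "net.ipv4.tcp_keepalive_probes",
)


def sysctl_path(name: str) -> Path:
    """Map a dotted sysctl name to its file under /proc/sys."""
    return Path("/proc/sys") / name.replace(".", "/")


def read_sysctls(names: Iterable[str] = PARAMS) -> Dict[str, Optional[str]]:
    """Read each parameter's current value; None if the file does not exist."""
    values: Dict[str, Optional[str]] = {}
    for name in names:
        path = sysctl_path(name)
        values[name] = path.read_text().strip() if path.exists() else None
    return values


if __name__ == "__main__":
    for name, value in read_sysctls().items():
        print(f"{name} = {value}")
```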

Benchmark results

Here are the results:

The best performance for a single server is 25K qps; however, it is not very stable at that level: I observed an almost full TCP queue and some connection failures when requesting the URI. Apart from that, everything seems normal. A conservative value is about 23K qps, which comes with 300K established TCP connections, 200Kpps, 500K interrupts, and 40% CPU utilization.

During that time, the total resources consumed from the IPVS perspective are 900K current connections, 600Kpps, 800Mbps, and 100K qps.
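A few ratios fall straight out of these figures. This Python sketch only does the arithmetic; it assumes 800Mbps counts bits per second and that pps, qps, and interrupts are all per-second rates, which the original does not state explicitly. Reading the established-connection figure through Little's law (one connection per request, as with non-persistent connections) is also an addition here.

```python
def ipvs_ratios(bits_per_s: float, pps: float, qps: float) -> dict:
    """Average packet size and per-request cost from aggregate IPVS rates."""
    return {
        "bytes_per_packet": bits_per_s / 8 / pps,
        "packets_per_request": pps / qps,
        "bytes_per_request": bits_per_s / 8 / qps,
    }


def server_ratios(established: float, pps: float, interrupts: float, qps: float) -> dict:
    """Per-server ratios; connection lifetime uses Little's law (L = lambda * W)."""
    return {
        "packets_per_request": pps / qps,
        "interrupts_per_packet": interrupts / pps,
        "avg_connection_lifetime_s": established / qps,
    }


if __name__ == "__main__":
    print(ipvs_ratios(800e6, 600e3, 100e3))
    # roughly 167 bytes/packet, 6 packets and 1000 bytes per request
    print(server_ratios(300e3, 200e3, 500e3, 23e3))
    # roughly 8.7 packets/request, 2.5 interrupts/packet, 13 s per connection
```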

The above benchmark was run between 10:30 PM and 11:30 PM; the peak usually falls between 10:00 PM and 10:30 PM.


This article was reposted from liqius's 51CTO blog. Original link: http://blog.51cto.com/szgb17/1927566. For reposting, please contact the original author.
