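This walkthrough uses CentOS 7.

The cluster has 3 servers, each with a base configuration of 4 cores, 4 GB of memory, and a 200 GB mechanical hard disk.

The following steps start from freshly installed systems.

Run them as the root account.

The Postgres-XL release installed here is compatible with PostgreSQL 10.

Step 1: On every server in the cluster, edit /etc/hosts and add the following entries: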

10.228.0.45 gtm

10.228.0.46 datanode1

10.228.0.47 datanode2

Step 2: Disable the firewall

systemctl stop firewalld.service

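systemctl disable firewalld.service

Step 3: Disable SELinux

vim /etc/selinux/config

SELINUX=disabled

Step 4: Install the base dependency packages

yum install -y flex bison readline-devel zlib-devel openjade docbook-style-dsssl git gcc

Step 5: Reboot so that the earlier configuration takes effect

reboot

Step 6: Create the postgres user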

useradd postgres

passwd postgres

Step 7: Set up passwordless SSH

su - postgres

mkdir ~/.ssh

chmod 700 ~/.ssh

ssh-keygen -t rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Step 8: Copy the SSH authorized_keys file from the gtm node to the datanode storage nodes

scp ~/.ssh/authorized_keys postgres@datanode1:~/.ssh/

scp ~/.ssh/authorized_keys postgres@datanode2:~/.ssh/

Step 9: Install postgresql-xl

su root

cd /opt

git clone git://git.postgresql.org/git/postgres-xl.git

cd postgres-xl/

./configure --prefix=/home/postgres/pgxl/

make

make install

cd contrib

make

make install

Step 10: Configure the base environment variables

su - postgres

vim .bashrc

Append the following to the end of the file:

export PGHOME=/home/postgres/pgxl

export LD_LIBRARY_PATH=$PGHOME/lib:$LD_LIBRARY_PATH

export PATH=$PGHOME/bin:$PATH

Step 11: Apply the environment variables and check that the configuration works

source .bashrc

echo $PGHOME

The base environment is now installed. The following steps configure the cluster.

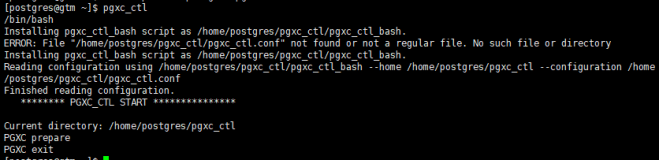
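Before moving on to the cluster configuration, it can help to confirm that the base environment really is in place on each machine. The following is only a sketch: the function names `missing_hosts`, `missing_tools`, and `can_ssh_without_password` are hypothetical helpers, not part of Postgres-XL, and nothing runs until a function is called.

```shell
#!/usr/bin/env bash
# Preflight helpers for the base environment (hypothetical names).

# Print every name (arguments 2..n) that has no entry in the hosts file
# given as the first argument. Commented-out lines are ignored.
missing_hosts() {
    local hosts_file=$1; shift
    local name
    for name in "$@"; do
        awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit found ? 0 : 1 }
        ' "$hosts_file" || echo "$name"
    done
}

# Print every command from the arguments that is not found on PATH.
missing_tools() {
    local tool
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Return 0 only if key-based ssh to the given host works without a
# password prompt (BatchMode makes ssh fail instead of asking).
can_ssh_without_password() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}
```

A possible way to use it as the postgres user on the gtm node: `missing_hosts /etc/hosts gtm datanode1 datanode2`, `missing_tools pgxc_ctl psql initdb`, and `can_ssh_without_password datanode1 && echo ok`. Empty output from the first two calls means nothing is missing.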

Step 1: Generate the pgxc_ctl configuration file in the postgres user's home directory

pgxc_ctl

PGXC prepare

PGXC exit

Step 2: Edit the pgxc_ctl.conf configuration file

#!/usr/bin/env bash

#

# Postgres-XC Configuration file for pgxc_ctl utility.

#

# Configuration file can be specified as -c option from pgxc_ctl command. Default is

# $PGXC_CTL_HOME/pgxc_ctl.org.

#

# This is bash script so you can make any addition for your convenience to configure

# your Postgres-XC cluster.

#

# Please understand that pgxc_ctl provides only a subset of configuration which pgxc_ctl

# provide. Here are several assumptions/restrictions pgxc_ctl depends on.

#

# 1) All the resources of pgxc nodes has to be owned by the same user. Same user means

# user with the same user name. User ID may be different from server to server.

# This must be specified as a variable $pgxcOwner.

#

# 2) All the servers must be reachable via ssh without password. It is highly recommended

# to setup key-based authentication among all the servers.

#

# 3) All the databases in coordinator/datanode has at least one same superuser. Pgxc_ctl

# uses this user to connect to coordinators and datanodes. Again, no password should

# be used to connect. You have many options to do this, pg_hba.conf, pg_ident.conf and

# others. Pgxc_ctl provides a way to configure pg_hba.conf but not pg_ident.conf. This

# will be implemented in the later releases.

#

# 4) Gtm master and slave can have different port to listen, while coordinator and datanode

# slave should be assigned the same port number as master.

#

# 5) Port number of a coordinator slave must be the same as its master.

#

# 6) Master and slave are connected using synchronous replication. Asynchronous replication

# have slight (almost none) chance to bring total cluster into inconsistent state.

# This chance is very low and may be negligible. Support of asynchronous replication

# may be supported in the later release.

#

# 7) Each coordinator and datanode can have only one slave each. Cascaded replication and

# multiple slave are not supported in the current pgxc_ctl.

#

# 8) Killing nodes may end up with IPC resource leak, such as semaphore and shared memory.

# Only listening port (socket) will be cleaned with clean command.

#

# 9) Backup and restore are not supported in pgxc_ctl at present. This is a big task and

# may need considerable resource.

#

#========================================================================================

#

#

# pgxcInstallDir variable is needed if you invoke "deploy" command from pgxc_ctl utility.

# If you don't, you don't need this variable.

pgxcInstallDir=$HOME/pgxc

#---- OVERALL -----------------------------------------------------------------------------

#

pgxcOwner=$USER # owner of the Postgres-XC database cluster. Here, we use this

# both as linux user and database user. This must be

# the super user of each coordinator and datanode.

pgxcUser=$pgxcOwner # OS user of Postgres-XC owner

tmpDir=/tmp # temporary dir used in XC servers

localTmpDir=$tmpDir # temporary dir used here locally

configBackup=n # If you want config file backup, specify y to this value.

configBackupHost=pgxc-linker # host to backup config file

configBackupDir=$HOME/pgxc # Backup directory

configBackupFile=pgxc_ctl.bak # Backup file name --> Need to synchronize when original changed.

#---- GTM ------------------------------------------------------------------------------------

# GTM is mandatory. You must have at least (and only) one GTM master in your Postgres-XC cluster.

# If GTM crashes and you need to reconfigure it, you can do it by pgxc_update_gtm command to update

# GTM master with others. Of course, we provide pgxc_remove_gtm command to remove it. This command

# will not stop the current GTM. It is up to the operator.

#---- GTM Master -----------------------------------------------

#---- Overall ----

gtmName=gtm

gtmMasterServer=gtm

gtmMasterPort=20001

gtmMasterDir=$HOME/pgxc/nodes/gtm

#---- Configuration ---

gtmExtraConfig=none # Will be added gtm.conf for both Master and Slave (done at initialization only)

gtmMasterSpecificExtraConfig=none # Will be added to Master's gtm.conf (done at initialization only)

#---- GTM Slave -----------------------------------------------

# Because GTM is a key component to maintain database consistency, you may want to configure GTM slave

# for backup.

#---- Overall ------

gtmSlave=n # Specify y if you configure GTM Slave. Otherwise, GTM slave will not be configured and

# all the following variables will be reset.

#gtmSlaveName=gtmSlave

#gtmSlaveServer=node12 # value none means GTM slave is not available. Give none if you don't configure GTM Slave.

#gtmSlavePort=20001 # Not used if you don't configure GTM slave.

#gtmSlaveDir=$HOME/pgxc/nodes/gtm # Not used if you don't configure GTM slave.

# Please note that when you have GTM failover, then there will be no slave available until you configure the slave

# again. (pgxc_add_gtm_slave function will handle it)

#---- Configuration ----

#gtmSlaveSpecificExtraConfig=none # Will be added to Slave's gtm.conf (done at initialization only)

#---- GTM Proxy -------------------------------------------------------------------------------------------------------

# GTM proxy will be selected based upon which server each component runs on.

# When fails over to the slave, the slave inherits its master's gtm proxy. It should be

# reconfigured based upon the new location.

#

# To do so, slave should be restarted. So pg_ctl promote -> (edit postgresql.conf and recovery.conf) -> pg_ctl restart

#

# You don't have to configure GTM Proxy if you don't configure GTM slave or you are happy if every component connects

# to GTM Master directly. If you configure GTM slave, you must configure GTM proxy too.

#---- Shortcuts ------

gtmProxyDir=$HOME/pgxc/nodes/gtm_pxy

#---- Overall -------

gtmProxy=y # Specify y if you configure at least one GTM proxy. You may not configure gtm proxies

# only when you don't configure GTM slaves.

# If you specify this value not to y, the following parameters will be set to default empty values.

# If we find there're no valid Proxy server names (means, every servers are specified

# as none), then gtmProxy value will be set to "n" and all the entries will be set to

# empty values.

gtmProxyNames=(gtm_pxy1 gtm_pxy2) # Not used if it is not configured

gtmProxyServers=(datanode1 datanode2) # Specify none if you don't configure it.

gtmProxyPorts=(20001 20001) # Not used if it is not configured.

gtmProxyDirs=($gtmProxyDir $gtmProxyDir) # Not used if it is not configured.

#---- Configuration ----

gtmPxyExtraConfig=none # Extra configuration parameter for gtm_proxy. Coordinator section has an example.

gtmPxySpecificExtraConfig=(none none)

#---- Coordinators ----------------------------------------------------------------------------------------------------

#---- shortcuts ----------

coordMasterDir=$HOME/pgxc/nodes/coord

coordSlaveDir=$HOME/pgxc/nodes/coord_slave

coordArchLogDir=$HOME/pgxc/nodes/coord_archlog

#---- Overall ------------

coordNames=(coord1) # Master and slave use the same name

coordPorts=(20004) # Master ports

poolerPorts=(20010) # Master pooler ports

coordPgHbaEntries=(10.228.0.0/25) # Assumes that all the coordinator (master/slave) accepts

# the same connection

# This entry allows only $pgxcOwner to connect.

# If you'd like to setup another connection, you should

# supply these entries through files specified below.

# Note: The above parameter is extracted as "host all all 0.0.0.0/0 trust". If you don't want

# such setups, specify the value () to this variable and supply what you want using coordExtraPgHba

# and/or coordSpecificExtraPgHba variables.

#coordPgHbaEntries=(::1/128) # Same as above but for IPv6 addresses

#---- Master -------------

coordMasterServers=(gtm) # none means this master is not available

coordMasterDirs=($coordMasterDir)

coordMaxWALsernder=5 # max_wal_senders: needed to configure slave. If zero value is specified,

# it is expected to supply this parameter explicitly by external files

# specified in the following. If you don't configure slaves, leave this value to zero.

coordMaxWALSenders=($coordMaxWALsernder)

# max_wal_senders configuration for each coordinator.

#---- Slave -------------

coordSlave=n # Specify y if you configure at least one coordinator slave. Otherwise, the following

# configuration parameters will be set to empty values.

# If no effective server names are found (that is, every servers are specified as none),

# then coordSlave value will be set to n and all the following values will be set to

# empty values.

coordSlaveSync=y # Specify to connect with synchronized mode.

coordSlaveServers=(datanode1 datanode2) # none means this slave is not available

coordSlavePorts=(20004 20005 20004 20005) # Master ports

coordSlavePoolerPorts=(20010 20011 20010 20011) # Master pooler ports

coordSlaveDirs=($coordSlaveDir $coordSlaveDir)

coordArchLogDirs=($coordArchLogDir $coordArchLogDir)

#---- Configuration files---

# Need these when you'd like setup specific non-default configuration

# These files will go to corresponding files for the master.

# You may supply your bash script to setup extra config lines and extra pg_hba.conf entries

# Or you may supply these files manually.

coordExtraConfig=coordExtraConfig # Extra configuration file for coordinators.

# This file will be added to all the coordinators'

# postgresql.conf

# Please note that the following sets up minimum parameters which you may want to change.

# You can put your postgresql.conf lines here.

cat > $coordExtraConfig <<EOF

#================================================

# Added to all the coordinator postgresql.conf

# Original: $coordExtraConfig

log_destination = 'stderr'

logging_collector = on

log_directory = 'pg_log'

listen_addresses = '*'

max_connections = 100

EOF

# Additional Configuration file for specific coordinator master.

# You can define each setting by similar means as above.

coordSpecificExtraConfig=(none none)

coordExtraPgHba=none # Extra entry for pg_hba.conf. This file will be added to all the coordinators' pg_hba.conf

coordSpecificExtraPgHba=(none none)

#----- Additional Slaves -----

#

# Please note that this section is just a suggestion how we extend the configuration for

# multiple and cascaded replication. They're not used in the current version.

#

coordAdditionalSlaves=n # Additional slave can be specified as follows: where you

coordAdditionalSlaveSet=(cad1) # Each specifies set of slaves. This case, two set of slaves are

# configured

cad1_Sync=n # All the slaves at "cad1" are connected with asynchronous mode.

# If not, specify "y"

# The following lines specifies detailed configuration for each

# slave tag, cad1. You can define cad2 similarly.

cad1_Servers=(datanode1 datanode2) # Hosts

cad1_dir=$HOME/pgxc/nodes/coord_slave_cad1

cad1_Dirs=($cad1_dir $cad1_dir)

cad1_ArchLogDir=$HOME/pgxc/nodes/coord_archlog_cad1

cad1_ArchLogDirs=($cad1_ArchLogDir $cad1_ArchLogDir)

#---- Datanodes -------------------------------------------------------------------------------------------------------

#---- Shortcuts --------------

datanodeMasterDir=$HOME/pgxc/nodes/dn_master

datanodeSlaveDir=$HOME/pgxc/nodes/dn_slave

datanodeArchLogDir=$HOME/pgxc/nodes/datanode_archlog

#---- Overall ---------------

#primaryDatanode=datanode1 # Primary Node.

# At present, xc has a problem to issue ALTER NODE against the primary node. Until it is fixed, the test will be done

# without this feature.

primaryDatanode=datanode1 # Primary Node.

datanodeNames=(datanode1 datanode2)

datanodePorts=(20008 20008) # Master ports

datanodePoolerPorts=(20012 20012) # Master pooler ports

datanodePgHbaEntries=(10.228.0.0/25) # Assumes that all the coordinator (master/slave) accepts

# the same connection

# This list sets up pg_hba.conf for $pgxcOwner user.

# If you'd like to setup other entries, supply them

# through extra configuration files specified below.

# Note: The above parameter is extracted as "host all all 0.0.0.0/0 trust". If you don't want

# such setups, specify the value () to this variable and supply what you want using datanodeExtraPgHba

# and/or datanodeSpecificExtraPgHba variables.

#datanodePgHbaEntries=(::1/128) # Same as above but for IPv6 addresses

#---- Master ----------------

datanodeMasterServers=(datanode1 datanode2) # none means this master is not available.

# This means that there should be the master but is down.

# The cluster is not operational until the master is

# recovered and ready to run.

datanodeMasterDirs=($datanodeMasterDir $datanodeMasterDir)

datanodeMaxWalSender=5 # max_wal_senders: needed to configure slave. If zero value is

# specified, it is expected this parameter is explicitly supplied

# by external configuration files.

# If you don't configure slaves, leave this value zero.

datanodeMaxWALSenders=($datanodeMaxWalSender $datanodeMaxWalSender)

# max_wal_senders configuration for each datanode

#---- Slave -----------------

datanodeSlave=n # Specify y if you configure at least one datanode slave. Otherwise, the following

# configuration parameters will be set to empty values.

# If no effective server names are found (that is, every servers are specified as none),

# then datanodeSlave value will be set to n and all the following values will be set to

# empty values.

datanodeSlaveServers=(datanode1 datanode2) # value none means this slave is not available

datanodeSlavePorts=(20008 20008) # value none means this slave is not available

datanodeSlavePoolerPorts=(20012 20012) # value none means this slave is not available

datanodeSlaveSync=y # If datanode slave is connected in synchronized mode

datanodeSlaveDirs=($datanodeSlaveDir $datanodeSlaveDir)

datanodeArchLogDirs=( $datanodeArchLogDir $datanodeArchLogDir)

# ---- Configuration files ---

# You may supply your bash script to setup extra config lines and extra pg_hba.conf entries here.

# These files will go to corresponding files for the master.

# Or you may supply these files manually.

datanodeExtraConfig=none # Extra configuration file for datanodes. This file will be added to all the

# datanodes' postgresql.conf

datanodeSpecificExtraConfig=(none none)

datanodeExtraPgHba=none # Extra entry for pg_hba.conf. This file will be added to all the datanodes' pg_hba.conf

datanodeSpecificExtraPgHba=(none none)

#----- Additional Slaves -----

datanodeAdditionalSlaves=n # Additional slave can be specified as follows: where you

# datanodeAdditionalSlaveSet=(dad1 dad2) # Each specifies set of slaves. This case, two set of slaves are

# configured

# dad1_Sync=n # All the slaves at "cad1" are connected with asynchronous mode.

# If not, specify "y"

# The following lines specifies detailed configuration for each

# slave tag, cad1. You can define cad2 similarly.

# dad1_Servers=(node08 node09 node06 node07) # Hosts

# dad1_dir=$HOME/pgxc/nodes/coord_slave_cad1

# dad1_Dirs=($cad1_dir $cad1_dir $cad1_dir $cad1_dir)

# dad1_ArchLogDir=$HOME/pgxc/nodes/coord_archlog_cad1

# dad1_ArchLogDirs=($cad1_ArchLogDir $cad1_ArchLogDir $cad1_ArchLogDir $cad1_ArchLogDir)

#---- WAL archives -------------------------------------------------------------------------------------------------

walArchive=n # If you'd like to configure WAL archive, edit this section.

# Pgxc_ctl assumes that if you configure WAL archive, you configure it

# for all the coordinators and datanodes.

# Default is "no". Please specify "y" here to turn it on.

#

# End of Configuration Section

#

#==========================================================================================================================

#========================================================================================================================

# The following is for extension. Just demonstrate how to write such extension. There's no code

# which takes care of them so please ignore the following lines. They are simply ignored by pgxc_ctl.

# No side effects.

#=============<< Beginning of future extension demonstration >> ========================================================

# You can setup more than one backup set for various purposes, such as disaster recovery.

walArchiveSet=(war1 war2)

war1_source=(master) # you can specify master, slave or any other additional slaves as a source of WAL archive.

# Default is the master

wal1_source=(slave)

wal1_source=(additiona_coordinator_slave_set additional_datanode_slave_set)

war1_host=node10 # All the nodes are backed up at the same host for a given archive set

war1_backupdir=$HOME/pgxc/backup_war1

wal2_source=(master)

war2_host=node11

war2_backupdir=$HOME/pgxc/backup_war2

#=============<< End of future extension demonstration >> ========================================================
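The per-node settings in this file are parallel bash arrays, so names, ports, pooler ports, and servers presumably need one entry per node. A mismatch in length is easy to introduce when editing by hand. As a rough sketch (the helpers `array_len` and `check_same_length` are hypothetical and not part of pgxc_ctl), the lengths can be compared before running the initialization. The sample values are copied from the datanode section above.

```shell
#!/usr/bin/env bash
# Print the element count of the array whose name is passed in.
# Uses indirect expansion rather than namerefs, so it also works on bash 4.2.
array_len() {
    local ref="$1[@]"
    local items=("${!ref}")
    echo "${#items[@]}"
}

# Succeed only if every named array has as many elements as the first one.
check_same_length() {
    local first=$1 expected status=0 name
    expected=$(array_len "$first")
    for name in "$@"; do
        if [ "$(array_len "$name")" -ne "$expected" ]; then
            echo "$name has $(array_len "$name") entries, expected $expected (as in $first)" >&2
            status=1
        fi
    done
    return "$status"
}

# Values copied from the datanode section of the configuration above.
datanodeNames=(datanode1 datanode2)
datanodePorts=(20008 20008)
datanodePoolerPorts=(20012 20012)
datanodeMasterServers=(datanode1 datanode2)

check_same_length datanodeNames datanodePorts datanodePoolerPorts datanodeMasterServers \
    && echo "datanode arrays are consistent"
```

The same check could be applied to the coordinator and GTM proxy arrays. It only compares lengths; it does not verify that ports are free or that hosts are reachable.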

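Step 3: Run the initialization

pgxc_ctl -c /home/postgres/pgxc_ctl/pgxc_ctl.conf init all

Step 4: Start the cluster

The cluster is already running once initialization completes. For routine start and stop, use the following commands:

pgxc_ctl -c /home/postgres/pgxc_ctl/pgxc_ctl.conf start all

pgxc_ctl -c /home/postgres/pgxc_ctl/pgxc_ctl.conf stop all

Step 5: Open psql

psql -p 20004

Connect to another remote node:

psql -h datanode2 -p 20008 -U postgres

Query the cluster status: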

select * from pgxc_node;
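Once pgxc_node lists the coordinator and both datanodes, a small smoke test can show whether rows are actually spread across the datanodes. This is a sketch under assumptions: `smoke_t` is a hypothetical table name, `smoke_test_sql` is a hypothetical helper, and the node names datanode1 and datanode2 are the ones configured in datanodeNames above. It relies on the Postgres-XL `DISTRIBUTE BY HASH` clause and the `EXECUTE DIRECT` statement.

```shell
#!/usr/bin/env bash
# Emit SQL that creates a hash-distributed table, loads 1000 rows, counts
# the rows stored on each datanode, and then drops the table again.
smoke_test_sql() {
    cat <<'EOF'
CREATE TABLE smoke_t (id int, note text) DISTRIBUTE BY HASH (id);
INSERT INTO smoke_t SELECT g, 'row ' || g FROM generate_series(1, 1000) AS g;
EXECUTE DIRECT ON (datanode1) 'SELECT count(*) FROM smoke_t';
EXECUTE DIRECT ON (datanode2) 'SELECT count(*) FROM smoke_t';
DROP TABLE smoke_t;
EOF
}
```

To run it against the coordinator from this guide, pipe the output into psql, for example `smoke_test_sql | psql -p 20004`. The two counts should add up to 1000 if both datanodes are storing data; the exact split depends on the hash distribution.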