Alibaba Cloud Developer Community

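Take Your Documentation from Static Display to One-Click Deployment with Hands-On Verification

Powered by Function Compute, Alibaba Cloud documentation has been upgraded from static display to dynamic, hands-on verification: users click the one-click deploy button inside a document to quickly deploy and test the code. This change has already been well received by users at Function Compute salon events.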

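Generate Videos in One Click! Deploying the SVD AI Video Generation Workflow with PAI-EAS

This tutorial walks you through claiming the free trial resources for Alibaba Cloud PAI-EAS and then using the SVD model in a ComfyUI environment to generate a short video from any image.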

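Using Yitian | YODA, the Yitian Application Migration Tool, Makes Cross-Architecture Migration Simple and Efficient

YODA (Yitian Optimal Development Assistant, the Yitian application migration tool) aims to help users migrate applications across platforms and architectures more efficiently and conveniently, greatly shortening customers' end-to-end performance...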

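Paimon and Spark Integration (Part 2): Query Optimization

Through a series of optimizations, we improved the performance of Paimon x Spark on TPC-DS by more than 37%, bringing it roughly on par with Parquet x Spark. This article describes the key optimizations in detail.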

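Best Practices for ECS Instance Type Selection

This course explains how customers, once they have clarified their business's functional, performance, and stability requirements along with their cost constraints, can learn the characteristics of each instance family and instance type and choose the server type that matches their needs. Instance type selection best practices are meant to help users weigh the performance, price, ...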

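Tutorial: Creating a To-Do List Application

An Alibaba Cloud instructor walks you step by step through deploying a to-do list application. This lab can be completed with either personal account resources or a free trial quota. We recommend first choosing personal account resources purchased with the 300-yuan student coupon you have already claimed from the 云工开物 university program; if you have free...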

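The conda mirror source stopped working yesterday and returns 404; dependency packages cannot currently be installed or used

Problem description: the conda mirror source has stopped working and returns 404, so the packages we currently depend on cannot be installed or used. Failing mirror channel address: conda-forge: http://mirrors.aliyun.com/anaconda/cloud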

Alibaba Cloud Bailian (百炼) Large Model Product in Practice

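Doodle Jump: Building Games with Flutter & Flame Is Really Great!

What is it like to build a game with Flutter & Flame? While surfing the web recently, I stumbled on a foreign game site similar to China's 4399. While browsing, I came across a classic little game: Doodle Jump...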

Launch ComfyUI in One Click with PAI-EAS! SVD One-Click Image-to-Video Generation: Stable Video Diffusion Tutorial and SVD Workflow

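Alibaba Cloud Product Handbook, 2024 Edition

As a major builder of the digital economy, Alibaba Cloud keeps deepening its core technology strength and uses its own capabilities to help customers achieve high-quality growth and jointly create a new digital world. The 2024 edition of the Alibaba Cloud Product Handbook includes a product map, About Alibaba Cloud, an introduction, security and compliance, and more, covering artificial intelligence and machine...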

After loading a ModelScope model, why does calling model.chat() raise an error?

After loading a ModelScope model, why does calling `model.chat()` raise the error `AttributeError: Qwen2ForCausalLM object has no attribute chat`?
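The teaser does not include an answer, so the following is only a hedged sketch of one common workaround, not the thread's accepted solution. It assumes the model and tokenizer follow the Hugging Face Transformers interface (`apply_chat_template`, `generate`, `decode`), where `Qwen2ForCausalLM` has no `chat()` helper. The function name `chat_once`, the single-turn message format, and the `chat(tokenizer, query, history=None)` call shape (taken from first-generation Qwen custom code) are assumptions for illustration.

```python
def chat_once(model, tokenizer, user_text, max_new_tokens=256):
    """Return one reply, whether or not the model object exposes chat()."""
    if hasattr(model, "chat"):
        # Assumption: checkpoints that ship custom code expose
        # chat(tokenizer, query, history=None) -> (response, history).
        response, _history = model.chat(tokenizer, user_text, history=None)
        return response

    # No chat() helper: build the prompt from the tokenizer's chat template.
    messages = [{"role": "user", "content": user_text}]
    prompt = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([prompt], return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # generate() returns prompt tokens followed by new tokens; keep only the new ones.
    prompt_len = len(inputs["input_ids"][0])
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True)
```

When the model sits on a GPU, the tokenized inputs usually need to be moved to the model's device before calling `generate`; that step is left out here to keep the sketch short.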

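More Efficient and Accurate In-Database Task Scheduling: Implementation and Application of the Built-In Job Scheduler in Apache Doris, the Kernel of Alibaba Cloud Database SelectDB

Apache Doris 2.1 introduces a built-in Job Scheduler that aims to remove the dependency on external scheduling systems and to provide scheduled task management with second-level precision.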

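A Deep Dive into Java Microservice Architecture: An Overview of Spring Cloud

**Abstract:** This article takes an in-depth look at Spring Cloud in Java microservice architecture and explains how microservice architecture addresses the limitations of traditional monolithic architecture through properties such as loose coupling, independent deployment, scalability, and fault tolerance. Spring Cloud, as a...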

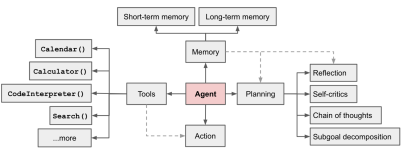

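Deploying Your Own Agent Platform on Function Compute

The concepts and application value of Agents and Agent platforms were covered in the article "Introduction to Agent Platforms". Next comes the hands-on part: quickly getting your own Agent platform on Alibaba Cloud Function Compute, AgentCraft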

Higress's All-New Wasm Runtime Delivers a Major Performance Boost

This article covers the latest progress on switching the runtime for Higress Wasm plugins from V8 to WebAssembly Micro Runtime (WAMR).

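Hands-On Test of Yunxiao Pipeline Intelligent Troubleshooting: AI-Powered DevOps, a Practical Review of Precise Diagnosis and Efficient Fixes

Yunxiao Flow, the continuous integration pipeline, is an enterprise-grade CI/CD tool from Alibaba Cloud that is free and ready to use upon registration. It offers high availability, zero operations overhead, deep integration with Alibaba Cloud services, diverse release strategies, and a rich set of enterprise features. Product highlights include intelligent troubleshooting, which quickly pinpoints problems and improves...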

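[Recommended Event] Alibaba Cloud Linux Hands-On Learning Competition, with E-Certificates and Generous Prizes!

Take part in the OpenAtom Foundation's [OpenAnolis Community Alibaba Cloud Linux Hands-On Learning Competition](https://competition.atomgit.com/competitionInfo) to earn an e-certificate...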

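Best Practices for HarmonyOS NEXT Log Collection with Simple Log Service

The Simple Log Service (SLS) SDK for HarmonyOS provides full-scenario log collection, processing, and analysis spanning IoT, mobile, and server-side environments. It is designed to meet the needs of applications in the era of the Internet of Everything for diverse device access, efficient collaboration, and secure, reliable operation...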

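Accessing Redis from Higress with Custom Plugins

This article introduces Higress, an edge computing gateway built around WebAssembly (WASM) that lets users extend its functionality with plugins written in Go, C++, or Rust. It focuses on how to use the Redis plugin to implement rate limiting, caching...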

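The 13th Chengfeng Bole Award (乘风伯乐奖): Seeking 100 Talent Scouts; Invite New Bloggers to Join and Win

The Chengfeng Bole Award is open to bloggers (technical, star-rated, or expert) in the Alibaba Cloud Developer Community who have already joined the 乘风者计划 program. Inviting users to join the program earns physical rewards such as custom program merchandise. This round seeks 100 talent scouts across the Alibaba Cloud Developer Community; monthly invitation count TOP 1...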

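Breaking News! ClickHouse Enterprise Edition Is Now Officially Commercially Available!

Alibaba Cloud will host the livestream "ClickHouse Enterprise Edition Commercial Launch" at 14:00 on April 23, 2024, covering the architecture, features, and advantages of Alibaba Cloud ClickHouse Enterprise Edition, along with the product roadmap for the coming year. The livestream will also share ClickHou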

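Open Source Developer Salon, Beijing | Zero-Trust Architecture for Microservice Security

Speakers and guests: Liu Jun (陆龟) | Apache Member; Jiang Heqing (远云) | Apache Dubbo PMC; Sun Yumei (玉梅) | Alibaba Cloud Technical Expert; Ji Min (清铭) | Higress Maintainer; Ding Shuangxi (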

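Introduction to Agent Platforms

On November 9, 2023, Bill Gates published the article "AI is about to completely change how you use computers", which laid out in detail the technical vision behind Agents, a new generation of intelligent applications. In personal assistance, health care, education, productivity, entertainment and shopping, technology, and other...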

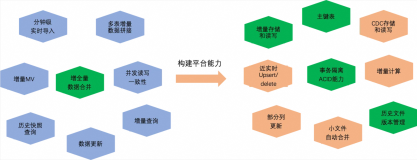

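MaxCompute's New Unified Architecture for Near-Real-Time Incremental and Full Data Processing, and Its Use Cases

This article explains how the new unified offline and near-real-time architecture built on MaxCompute supports these combined business scenarios, providing an integrated near-real-time storage and computing solution for incremental and full data (Transaction Table2.0).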

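PolarDB + AnalyticDB Zero-ETL: Sync Data to ADB for Free and Enjoy a New Data Flow Experience

Zero-ETL is a service from Alibaba Cloud's 瑶池 database family designed to reduce the complexity and cost of traditional ETL pipelines and to improve data freshness. It lowers the cost of data synchronization and lets users quickly analyze PolarDB data in AnalyticDB, cutting 30% of the...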

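Flink CDC in Practice for Alibaba Cloud DataWorks Data Integration

This article is adapted from a talk given by Wang Mingya (云时), a senior technical expert on the Alibaba Cloud DataWorks Data Integration team, in the data integration track at Flink Forward Asia 2023.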

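Python NLP Interviews: NLTK, SpaCy, and Hugging Face Libraries Explained

[April Writing Challenge, Day 16] This article covers common questions and pitfalls around the NLTK, SpaCy, and Hugging Face libraries in Python NLP interviews. Example code demonstrates tokenization, part-of-speech tagging, named entity recognition, similarity computation, dependency...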

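Using EEPROM to Retain Data Across Power Loss

This article introduces the use of EEPROM on Arduino. EEPROM is a type of non-volatile memory used to retain data after power is removed. Arduino boards such as the UNO and Duemilanove either have built-in EEPROM or can connect to external EEPROM, with varying capacities. A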

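Python Deep Learning Interviews: CNN, RNN, and Transformer Explained

[April Writing Challenge, Day 16] This article covers common questions and pitfalls about CNNs, RNNs, and Transformers in deep learning interviews, with Python code examples. Understanding the basic components and working principles of these three models, and their use in image recognition, text processing, and other...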

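TensorFlow vs. PyTorch in Python Interviews: Comparison and Application

[April Writing Challenge, Day 16] This post explores common TensorFlow and PyTorch questions in Python interviews, including basic framework operations, automatic differentiation and backpropagation, and data loading and preprocessing. Pitfalls include confusing the frameworks' APIs, dynamic versus static graph...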

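Alibaba Cloud Linux Basics (1): Configuring Zabbix

This document is a tutorial on configuring Zabbix on Alibaba Cloud Linux. First, add the Zabbix repository and install the relevant packages (such as zabbix-server, the web frontend, and the agent). Then install and start M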

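[Microservices Series Notes] Seata

Seata is an open-source distributed transaction solution designed to address the challenges of distributed transaction management. It provides high-performance, highly reliable distributed transaction services, supports multiple transaction modes including XA, TCC, and AT, and assigns a globally unique transaction ID to ensure transaction consistency and...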

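Python Machine Learning Interviews: Scikit-learn Fundamentals and Practice

[April Writing Challenge, Day 16] This article explores the key Scikit-learn topics in Python machine learning interviews, including data preprocessing (feature scaling, missing value handling, feature selection), model training and evaluation, hyperparameter tuning (grid search, random search), and...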

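Visualization Questions with Matplotlib and Seaborn in Python Interviews

[April Writing Challenge, Day 16] This article covers the key points of Python data visualization in interviews, focusing on the Matplotlib and Seaborn libraries. It walks through common interview questions using examples of basic plotting, advanced charts, figure customization, and interactive charts, and lists some...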

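Pandas in Python Interviews: Application and Hands-On Practice

[April Writing Challenge, Day 16] This article covers common questions and pitfalls around the Python data analysis library Pandas in interviews, including creating DataFrames and Series, reading and writing data, cleaning and preprocessing, querying and filtering, aggregation and grouping, and merging and joining data...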

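E-Book Recommendation: "10 Lessons on Code Management Practice"

📚 Recommended e-book: "10 Lessons on Code Management Practice"! Learn and level up your code management skills across 10 hands-on chapters that help you streamline your development workflow. Read it on the Alibaba Cloud developer platform: [View details](https://developer.aliyun.com/eb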

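E-Book Recommendation: "Introduction to the PolarDB Distributed Edition Architecture"

Explore an in-depth look at the architecture of Alibaba Cloud PolarDB Distributed Edition. The book details the design philosophy and technical characteristics of this high-performance cloud-native database, including storage-compute separation, horizontal scaling, and distributed transaction support. [Read the e-book](https://developer.a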

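E-Book Recommendation: "Mastering AIGC from Scratch"

Explore the world of AIGC! The e-book "Mastering AIGC from Scratch" leads readers on a journey into AI content generation. [Read the full book](https://developer.aliyun.com/ebook/8330/116541?spm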

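Python Data Analysis Interviews: NumPy Fundamentals and Applications

[April Writing Challenge, Day 16] Understanding and skillfully using NumPy is key to gauging Python data analysis ability. This article explores NumPy questions that often come up in interviews, including array creation, attributes, indexing and slicing, array operations, statistical functions, and reshaping and concatenation, and provides...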

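Python and NoSQL Databases (MongoDB, Redis, and Others): Interview Q&A

[April Writing Challenge, Day 16] This article explores common interview questions about Python and NoSQL databases such as MongoDB and Redis, including connecting to and operating on databases, error handling, advanced features, and caching strategies. It focuses on using `pymongo`...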

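Python and MySQL Database Interaction: Interview Practice

[April Writing Challenge, Day 16] This article covers the key interview points for Python and MySQL interaction, including connecting to the database with `mysql-connector-python` or `pymysql`, executing SQL queries, exception handling, preventing SQL...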

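[MySQL in Practice Notes] 05 | Indexes Made Simple (Part 2)-02

[April Writing Challenge, Day 16] B+ tree indexes use the leftmost prefix principle to speed up lookups, and the index still applies when only the leading part of the key is matched. A composite index [name-age] can locate the first record whose leftmost field starts with "张" and then scan forward to collect the results. Optimizing the column order of an index can reduce...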
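The leftmost prefix idea can be sketched in a few lines of Python. This is a simplified model, not MySQL's actual B+ tree: a sorted list of `(name, age, row_id)` tuples stands in for the composite index, `bisect_left` stands in for the tree descent, and the sample rows and function names are hypothetical.

```python
from bisect import bisect_left


def build_index(rows):
    """Order entries by (name, age), as a composite (name, age) index would."""
    return sorted((name, age, row_id) for row_id, name, age in rows)


def find_by_name_prefix(index, prefix):
    """Seek to the first entry >= prefix, then scan forward while it still matches."""
    start = bisect_left(index, (prefix,))
    ids = []
    for name, _age, row_id in index[start:]:
        if not name.startswith(prefix):
            break  # entries are sorted, so nothing later can match
        ids.append(row_id)
    return ids


def find_by_age(index, age):
    """age is not the leftmost column, so the ordering cannot help: scan everything."""
    return [row_id for _name, a, row_id in index if a == age]


# Hypothetical table rows: (row_id, name, age)
ROWS = [(1, "李四", 20), (2, "张三", 10), (3, "王五", 10), (4, "张六", 30), (5, "张三", 20)]
INDEX = build_index(ROWS)

print(find_by_name_prefix(INDEX, "张"))  # rows whose name starts with 张
print(find_by_age(INDEX, 10))            # needs a full scan of the index
```

The prefix lookup touches only the contiguous run of matching entries, while the age-only lookup has to visit every entry, which is why column order in a composite index matters.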

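Exploring the Support Vector Machine (SVM) Algorithm in Machine Learning

[April Writing Challenge, Day 19] In the broad field of data science and artificial intelligence, the support vector machine (SVM) has become one of the important machine learning algorithms thanks to its strong classification ability. This article analyzes the core principles of SVM, explores advanced application techniques, and demonstrates through examples how to...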

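Building a Shield of Security: Network Security and Information Protection Strategies in Cloud Computing Environments

[April Writing Challenge, Day 19] With the rapid development of cloud computing, enterprises and individuals increasingly rely on cloud services to store, process, and exchange data. Behind this convenience, however, lie potential security risks. This article takes an in-depth look at how, in the context of cloud computing, comprehensive security measures and...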

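A Deep Dive into PHP Namespaces and Autoloading

[April Writing Challenge, Day 19] In PHP programming, namespaces and autoloading are two core concepts. They not only help developers manage code effectively but also improve code reusability and maintainability. This article explores both concepts in depth and explains how they work and...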

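Snowball Learning Java (16): Mastering the JavaSE do-while Loop and Breaking with Traditional Thinking

[April Writing Challenge, Day 5] 🏆 This article is part of the "滚雪球学Java" column, which tackles the hard topics for exponential improvement. I hope it gives you a helping hand and helps you reach the top and achieve financial freedom sooner 🚀. You are also welcome to follow, bookmark, and subscribe! Updates are ongoing, up! up! up!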